© 2017 The original authors.

1. Target Audience

This document is a guide to the setup, administration, and configuration of WildFly.

1.1. Prerequisites

Before continuing, you should know how to download, install and run WildFly. For more information on these steps, refer here: Getting Started Guide.

1.2. Examples in this guide

The examples in this guide are largely expressed as XML configuration file excerpts, or by using a representation of the de-typed management model.

2. Core management concepts

2.1. Operating mode

WildFly can be booted in two different modes. A managed domain allows you to run and manage a multi-server topology. Alternatively, you can run a standalone server instance.

2.1.1. Standalone Server

For many use cases, the centralized management capability available via

a managed domain is not necessary. For these use cases, a WildFly

instance can be run as a "standalone server". A standalone server

instance is an independent process, much like an JBoss Application

Server 3, 4, 5, or 6 instance is. Standalone instances can be launched

via the standalone.sh or standalone.bat launch scripts.

If more than one standalone instance is launched and multi-server management is desired, it is the user’s responsibility to coordinate management across the servers. For example, to deploy an application across all of the standalone servers, the user would need to individually deploy the application on each server.

It is perfectly possible to launch multiple standalone server instances and have them form an HA cluster, just like it was possible with JBoss Application Server 3, 4, 5 and 6.

2.1.2. Managed Domain

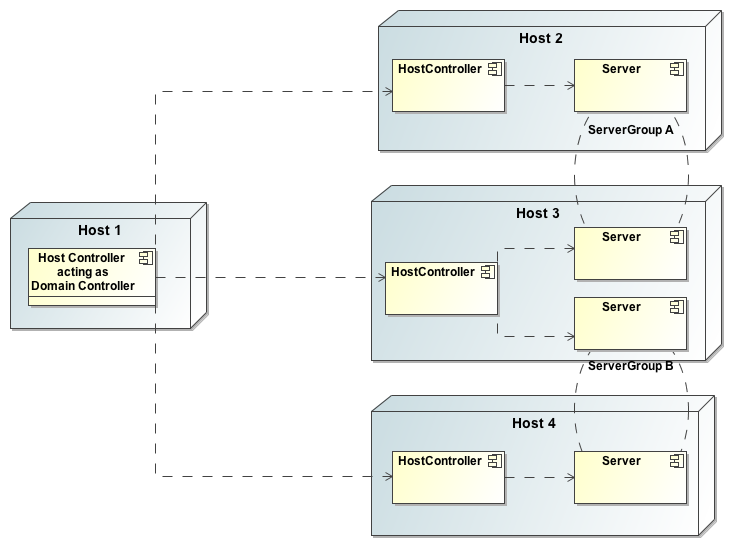

One of the primary new features of WildFly is the ability to manage multiple WildFly instances from a single control point. A collection of such servers is referred to as the members of a "domain" with a single Domain Controller process acting as the central management control point. All of the WildFly instances in the domain share a common management policy, with the Domain Controller acting to ensure that each server is configured according to that policy. Domains can span multiple physical (or virtual) machines, with all WildFly instances on a given host under the control of a special Host Controller process. One Host Controller instance is configured to act as the central Domain Controller. The Host Controller on each host interacts with the Domain Controller to control the lifecycle of the application server instances running on its host and to assist the Domain Controller in managing them.

When you launch a WildFly managed domain on a host (via the domain.sh

or domain.bat launch scripts) your intent is to launch a Host

Controller and usually at least one WildFly instance. On one of the

hosts the Host Controller should be configured to act as the Domain

Controller. See Domain Setup for details.

The following is an example managed domain topology:

Host

Each "Host" box in the above diagram represents a physical or virtual host. A physical host can contain zero, one or more server instances.

Host Controller

When the domain.sh or domain.bat script is run on a host, a process known as a Host Controller is launched. The Host Controller is solely concerned with server management; it does not itself handle application server workloads. The Host Controller is responsible for starting and stopping the individual application server processes that run on its host, and interacts with the Domain Controller to help manage them.

Each Host Controller by default reads its configuration from the

domain/configuration/host.xml file located in the unzipped WildFly

installation on its host’s filesystem. The host.xml file contains

configuration information that is specific to the particular host.

Primarily:

-

the listing of the names of the actual WildFly instances that are meant to run off of this installation.

-

configuration of how the Host Controller is to contact the Domain Controller to register itself and access the domain configuration. This may either be configuration of how to find and contact a remote Domain Controller, or a configuration telling the Host Controller to itself act as the Domain Controller.

-

configuration of items that are specific to the local physical installation. For example, named interface definitions declared in

domain.xml(see below) can be mapped to an actual machine-specific IP address inhost.xml. Abstract path names indomain.xmlcan be mapped to actual filesystem paths inhost.xml.

Domain Controller

One Host Controller instance is configured to act as the central

management point for the entire domain, i.e. to be the Domain

Controller. The primary responsibility of the Domain Controller is to

maintain the domain’s central management policy, to ensure all Host

Controllers are aware of its current contents, and to assist the Host

Controllers in ensuring any running application server instances are

configured in accordance with this policy. This central management

policy is stored by default in the domain/configuration/domain.xml

file in the unzipped WildFly installation on Domain Controller’s host’s

filesystem.

A domain.xml file must be located in the domain/configuration

directory of an installation that’s meant to run the Domain Controller.

It does not need to be present in installations that are not meant to

run a Domain Controller; i.e. those whose Host Controller is configured

to contact a remote Domain Controller. The presence of a domain.xml

file on such a server does no harm.

The domain.xml file includes, among other things, the configuration of

the various "profiles" that WildFly instances in the domain can be

configured to run. A profile configuration includes the detailed

configuration of the various subsystems that comprise that profile (e.g.

an embedded JBoss Web instance is a subsystem; a JBoss TS transaction

manager is a subsystem, etc). The domain configuration also includes the

definition of groups of sockets that those subsystems may open. The

domain configuration also includes the definition of "server groups":

Server Group

A server group is set of server instances that will be managed and configured as one. In a managed domain each application server instance is a member of a server group. (Even if the group only has a single server, the server is still a member of a group.) It is the responsibility of the Domain Controller and the Host Controllers to ensure that all servers in a server group have a consistent configuration. They should all be configured with the same profile and they should have the same deployment content deployed.

The domain can have multiple server groups. The above diagram shows two server groups, "ServerGroupA" and "ServerGroupB". Different server groups can be configured with different profiles and deployments; for example in a domain with different tiers of servers providing different services. Different server groups can also run the same profile and have the same deployments; for example to support rolling application upgrade scenarios where a complete service outage is avoided by first upgrading the application on one server group and then upgrading a second server group.

An example server group definition is as follows:

<server-group name="main-server-group" profile="default">

<socket-binding-group ref="standard-sockets"/>

<deployments>

<deployment name="foo.war_v1" runtime-name="foo.war" />

<deployment name="bar.ear" runtime-name="bar.ear" />

</deployments>

</server-group>A server-group configuration includes the following required attributes:

-

name — the name of the server group

-

profile — the name of the profile the servers in the group should run

In addition, the following optional elements are available:

-

socket-binding-group — specifies the name of the default socket binding group to use on servers in the group. Can be overridden on a per-server basis in

host.xml. If not provided in theserver-groupelement, it must be provided for each server inhost.xml. -

deployments — the deployment content that should be deployed on the servers in the group.

-

deployment-overlays — the overlays and their associated deployments.

-

system-properties — system properties that should be set on all servers in the group

-

jvm — default jvm settings for all servers in the group. The Host Controller will merge these settings with any provided in

host.xmlto derive the settings to use to launch the server’s JVM. See JVM settings for further details.

Server

Each "Server" in the above diagram represents an actual application server instance. The server runs in a separate JVM process from the Host Controller. The Host Controller is responsible for launching that process. (In a managed domain the end user cannot directly launch a server process from the command line.)

The Host Controller synthesizes the server’s configuration by combining

elements from the domain wide configuration (from domain.xml ) and the

host-specific configuration (from host.xml ).

2.1.3. Deciding between running standalone servers or a managed domain

Which use cases are appropriate for managed domain and which are appropriate for standalone servers? A managed domain is all about coordinated multi-server management — with it WildFly provides a central point through which users can manage multiple servers, with rich capabilities to keep those servers' configurations consistent and the ability to roll out configuration changes (including deployments) to the servers in a coordinated fashion.

It’s important to understand that the choice between a managed domain and standalone servers is all about how your servers are managed, not what capabilities they have to service end user requests. This distinction is particularly important when it comes to high availability clusters. It’s important to understand that HA functionality is orthogonal to running standalone servers or a managed domain. That is, a group of standalone servers can be configured to form an HA cluster. The domain and standalone modes determine how the servers are managed, not what capabilities they provide.

So, given all that:

-

A single server installation gains nothing from running in a managed domain, so running a standalone server is a better choice.

-

For multi-server production environments, the choice of running a managed domain versus standalone servers comes down to whether the user wants to use the centralized management capabilities a managed domain provides. Some enterprises have developed their own sophisticated multi-server management capabilities and are comfortable coordinating changes across a number of independent WildFly instances. For these enterprises, a multi-server architecture comprised of individual standalone servers is a good option.

-

Running a standalone server is better suited for most development scenarios. Any individual server configuration that can be achieved in a managed domain can also be achieved in a standalone server, so even if the application being developed will eventually run in production on a managed domain installation, much (probably most) development can be done using a standalone server.

-

Running a managed domain mode can be helpful in some advanced development scenarios; i.e. those involving interaction between multiple WildFly instances. Developers may find that setting up various servers as members of a domain is an efficient way to launch a multi-server cluster.

2.2. Feature stability levels

The WildFly project has high standards related to quality, stability and backwards compatibility. A key way an open source project like WildFly can ensure high standards are met is by "community bake" — allowing interested users to have access to features that are still undergoing a hardening process, while not forcing users who are not interested in such things to consume them.

To better facilitate this, WildFly 31 introduced the notion of formal "stability levels" that can be associated with functionality. When starting a WildFly process, users can use the --stability command line parameter to control the minimum stability level of available features, with a value of experimental, preview, community or default.

bin/standalone.sh --stability=previewFeatures at a lower stability level will not be available for use.

A WildFly installation will have a standard stability level, determined by the Galleon feature-pack used to provision the installation. This level is used if the --stability param is not set. For a standard WildFly installation, this level is community. For WildFly Preview it is preview.

Some details on the stability levels:

-

experimental — This level is for true bleeding edge functionality that may never advance to a higher stability level. No WildFly feature-pack or distribution zip/tar would enable this level by default.

-

preview — This is the level for features that are of a sufficient stability to be available by default in WildFly Preview, but not in standard WildFly. The general expectation for features at this level is that they will eventually move to

communitylevel in substantially similar form (although this is not guaranteed). -

community-- This is the level for features that are of a sufficient stability to be available by default in standard WildFly. Features at this level are not expected to change incompatibly over time in a manner inconsistent with the expectations of the Galleon feature-pack that provides them.

-

default — Features at this level have gone through additional vetting to ensure they are suitable for the long-term compatibility expectations of the Galleon feature-pack that provides them.

The vast majority of functionality provided in both standard WildFly and WildFly Preview is at the default stability level. Over time the amount of functionality at other levels, particularly community, is expected to increase.

|

| A feature being ‘available by default’ in a WildFly installation might not mean ‘enabled by default’, i.e. turned on in a standard out-of-the-box configuration. It could just mean a user could turn it on if they so choose using normal configuration tools like the CLI. |

2.2.1. Relationship to feature-packs

The Galleon feature-packs that WildFly produces themselves incorporate expectations for long-term feature stability and compatibility. The --stability startup setting discussed above just allows users to use a different setting than the standard one for the feature-pack.

-

wildfly-ee — This feature-pack is not widely used directly and WildFly does not produce any downloadable zip/tar built solely using it. However, it is transparently used internally in provisioning any standard WildFly installation, and most standard WildFly functionality is provisioned from this feature-pack. It can be used directly by users who wish to limit their installation to what it provides. The defining characteristic of this feature-pack is that it integrates technologies where we have the highest confidence in our ability to provide them in a largely compatible way for many years.

-

wildfly — This is the feature-pack most people use. It depends upon

wildfly-eeand adds functionality in addition to what is provisioned bywildfly-ee. The traditional standard WildFly server zip is built using this feature-pack. The primary reason things are provided in this feature-pack instead ofwildfly-eeis because the technology that is integrated is more likely to change in incompatible ways over a relatively short time period. For example, MicroProfile specifications are comfortable introducing breaking changes on an annual basis, making them a poor fit forwildfly-ee. The observability space, particularly metrics and tracing, is evolving rapidly, so our Micrometer and OpenTelemetry extensions are not inwildfly-ee. -

wildfly-preview — This feature-pack provisions WildFly Preview and is all about the fact that it provides no long term guarantees and can change significantly from release to release.

What we mean by the community and default levels is relative to the generally expected long-term maintainability and compatibility level of the feature-pack that provides it. In other words, just because a feature provided by the wildfly feature-pack has been vetted as suitable for the default level does not mean it comes with higher expectations than the feature-pack as a whole.

| WildFly Preview is also used to showcase functionality whose scope is not tied to a particular reasonably scoped ‘feature’. Using it in the past for Jakarta EE 9 was an example. Not having an embedded messaging broker in the standard configs is not a ‘feature’. |

2.3. General configuration concepts

For both a managed domain or a standalone server, a number of common configuration concepts apply:

2.3.1. Extensions

An extension is a module that extends the core capabilities of the

server. The WildFly core is very simple and lightweight; most of the

capabilities people associate with an application server are provided

via extensions. An extension is packaged as a module in the modules

folder. The user indicates that they want a particular extension to be

available by including an <extension/> element naming its module in

the domain.xml or standalone.xml file.

<extensions>

[...]

<extension module="org.jboss.as.transactions"/>

<extension module="org.jboss.as.webservices" />

<extension module="org.jboss.as.weld" />

[...]

<extension module="org.wildfly.extension.undertow"/>

</extensions>2.3.2. Profiles and Subsystems

The most significant part of the configuration in domain.xml and

standalone.xml is the configuration of one (in standalone.xml) or

more (in domain.xml) "profiles". A profile is a named set of subsystem

configurations. A subsystem is an added set of capabilities added to the

core server by an extension (see "Extensions" above). A subsystem

provides servlet handling capabilities; a subsystem provides an Jakarta Enterprise Beans

container; a subsystem provides Jakarta Transactions, etc. A profile is a named list of

subsystems, along with the details of each subsystem’s configuration. A

profile with a large number of subsystems results in a server with a

large set of capabilities. A profile with a small, focused set of

subsystems will have fewer capabilities but a smaller footprint.

The content of an individual profile configuration looks largely the

same in domain.xml and standalone.xml. The only difference is

standalone.xml is only allowed to have a single profile element (the

profile the server will run), while domain.xml can have many profiles,

each of which can be mapped to one or more groups of servers.

The contents of individual subsystem configurations look exactly the

same between domain.xml and standalone.xml.

2.3.3. Paths

A logical name for a filesystem path. The domain.xml, host.xml and

standalone.xml configurations all include a section where paths can be

declared. Other sections of the configuration can then reference those

paths by their logical name, rather than having to include the full

details of the path (which may vary on different machines). For example,

the logging subsystem configuration includes a reference to the "

`jboss.server.log.dir`" path that points to the server’s " `log`"

directory.

<file relative-to="jboss.server.log.dir" path="server.log"/>WildFly automatically provides a number of standard paths without any need for the user to configure them in a configuration file:

-

jboss.home.dir - the root directory of the WildFly distribution -

user.home- user’s home directory -

user.dir- user’s current working directory -

java.home- java installation directory -

jboss.server.base.dir- root directory for an individual server instance -

jboss.server.config.dir- directory the server will use for configuration file storage -

jboss.server.data.dir- directory the server will use for persistent data file storage -

jboss.server.log.dir- directory the server will use for log file storage -

jboss.server.temp.dir- directory the server will use for temporary file storage -

jboss.controller.temp.dir- directory the server will use for temporary file storage -

jboss.domain.servers.dir- directory under which a host controller will create the working area for individual server instances (managed domain mode only)

Users can add their own paths or override all except the first 5 of the

above by adding a <path/> element to their configuration file.

<path name="example" path="example" relative-to="jboss.server.data.dir"/>The attributes are:

-

name— the name of the path. -

path— the actual filesystem path. Treated as an absolute path, unless the 'relative-to' attribute is specified, in which case the value is treated as relative to that path. -

relative-to— (optional) the name of another previously named path, or of one of the standard paths provided by the system.

A <path/> element in a domain.xml need not include anything more

than the name attribute; i.e. it need not include any information

indicating what the actual filesystem path is:

<path name="x"/>Such a configuration simply says, "There is a path named 'x' that other

parts of the domain.xml configuration can reference. The actual

filesystem location pointed to by 'x' is host-specific and will be

specified in each machine’s host.xml file." If this approach is used,

there must be a path element in each machine’s host.xml that specifies

what the actual filesystem path is:

<path name="x" path="/var/x" />A <path/> element in a standalone.xml must include the specification

of the actual filesystem path.

2.3.4. Interfaces

A logical name for a network interface/IP address/host name to which

sockets can be bound. The domain.xml, host.xml and standalone.xml

configurations all include a section where interfaces can be declared.

Other sections of the configuration can then reference those interfaces

by their logical name, rather than having to include the full details of

the interface (which may vary on different machines). An interface

configuration includes the logical name of the interface as well as

information specifying the criteria to use for resolving the actual

physical address to use. See Interfaces

and ports for further details.

An <interface/> element in a domain.xml need not include anything

more than the name attribute; i.e. it need not include any information

indicating what the actual IP address associated with the name is:

<interface name="internal"/>Such a configuration simply says, "There is an interface named

'internal' that other parts of the domain.xml configuration can

reference. The actual IP address pointed to by 'internal' is

host-specific and will be specified in each machine’s host.xml file." If

this approach is used, there must be an interface element in each

machine’s host.xml that specifies the criteria for determining the IP

address:

<interface name="internal">

<nic name="eth1"/>

</interface>An <interface/> element in a standalone.xml must include the

criteria for determining the IP address.

See Interface declarations for full details.

2.3.5. Socket Bindings and Socket Binding Groups

A socket binding is a named configuration for a socket.

The domain.xml and standalone.xml configurations both include a

section where named socket configurations can be declared. Other

sections of the configuration can then reference those sockets by their

logical name, rather than having to include the full details of the

socket configuration (which may vary on different machines).

See Socket Binding Groups

for full details.

2.3.6. System Properties

System property values can be set in a number of places in domain.xml,

host.xml and standalone.xml. The values in standalone.xml are set

as part of the server boot process. Values in domain.xml and

host.xml are applied to servers when they are launched.

When a system property is configured in domain.xml or host.xml, the

servers it ends up being applied to depends on where it is set. Setting

a system property in a child element directly under the domain.xml

root results in the property being set on all servers. Setting it in a

<system-property/> element inside a <server-group/> element in

domain.xml results in the property being set on all servers in the

group. Setting it in a child element directly under the host.xml root

results in the property being set on all servers controlled by that

host’s Host Controller. Finally, setting it in a <system-property/>

element inside a <server/> element in host.xml result in the

property being set on that server. The same property can be configured

in multiple locations, with a value in a <server/> element taking

precedence over a value specified directly under the host.xml root

element, the value in a host.xml taking precedence over anything from

domain.xml, and a value in a <server-group/> element taking

precedence over a value specified directly under the domain.xml root

element.

2.3.7. Script Configuration Files

Scripts are located in the $JBOSS_HOME/bin directory. Within this directory you will find script configuration files

for standalone and domain startup scripts for each platform. These files can be used to configure your environment

without having to edit the scripts themselves. For example, you can configure the JAVA_OPTS environment variable to

configure the JVM before the container is launched.

Standalone Script Configuration Files:

-

standalone.confinvoked fromstandalone.sh -

standalone.conf.batinvoked fromstandalone.bat -

standalone.conf.ps1invoked fromstandalone.ps1

Domain Script Configuration Files:

-

domain.confinvoked fromdomain.sh -

domain.conf.batinvoked fromdomain.bat -

domain.conf.ps1invoked fromdomain.ps1

By default, these are in the $JBOSS_HOME/bin directory. However, you can set the STANDALONE_CONF environment

variable for standalone servers or DOMAIN_CONF environment variable for domain servers with a value of the

absolute path to the file.

Common Script Configuration Files

Starting with WildFly 23, common configuration files were introduced. These files are invoked from every script in the

$JBOSS_HOME/bin directory. While these configuration files are not present in the directory by default, they

can be added. You can simply just add the common.conf configuration file for the script type you want to

execute and all scripts in the directory will invoke the configuration script.

-

common.conffor bash scripts -

common.conf.batfor Windows batch scripts -

common.conf.ps1for PowerShell scripts

You can also set the COMMON_CONF environment variable to have this configuration script live outside the

$JBOSS_HOME/bin directory.

If you provide a common configuration file it will be invoked before the standalone and domain script

configuration files. For example invoking standalone.sh first invokes the common.conf then later invokes

the standalone.conf.

|

2.4. Management resources

When WildFly parses your configuration files at boot, or when you use one of the AS’s Management Clients you are adding, removing or modifying management resources in the AS’s internal management model. A WildFly management resource has the following characteristics:

2.4.1. Address

All WildFly management resources are organized in a tree. The path to the node in the tree for a particular resource is its address. Each segment in a resource’s address is a key/value pair:

-

The key is the resource’s type, in the context of its parent. So, for example, the root resource for a standalone server has children of type

subsystem,interface,socket-binding, etc. The resource for the subsystem that provides the AS’s webserver capability has children of typeconnectorandvirtual-server. The resource for the subsystem that provides the AS’s messaging server capability has, among others, children of typejms-queueandjms-topic. -

The value is the name of a particular resource of the given type, e.g

webormessagingfor subsystems orhttporhttpsfor web subsystem connectors.

The full address for a resource is the ordered list of key/value pairs that lead from the root of the tree to the resource. Typical notation is to separate the elements in the address with a '/' and to separate the key and the value with an '=':

-

/subsystem=undertow/server=default-server/http-listener=default -

/subsystem=messaging/jms-queue=testQueue -

/interface=public

When using the HTTP API, a '/' is used to separate the key and the value instead of an '=':

2.4.2. Operations

Querying or modifying the state of a resource is done via an operation. An operation has the following characteristics:

-

A string name

-

Zero or more named parameters. Each parameter has a string name, and a value of type

org.jboss.dmr.ModelNode(or, when invoked via the CLI, the text representation of aModelNode; when invoked via the HTTP API, the JSON representation of aModelNode.) Parameters may be optional. -

A return value, which will be of type

org.jboss.dmr.ModelNode(or, when invoked via the CLI, the text representation of aModelNode; when invoked via the HTTP API, the JSON representation of aModelNode.)

Every resource except the root resource will have an add operation and

should have a remove operation ("should" because in WildFly 31 many do

not). The parameters for the add operation vary depending on the

resource. The remove operation has no parameters.

There are also a number of "global" operations that apply to all resources. See Global operations for full details.

The operations a resource supports can themselves be determined by

invoking an operation: the read-operation-names operation. Once the

name of an operation is known, details about its parameters and return

value can be determined by invoking the read-operation-description

operation. For example, to learn the names of the operations exposed by

the root resource for a standalone server, and then learn the full

details of one of them, via the CLI one would:

[standalone@localhost:9990 /] :read-operation-names

{

"outcome" => "success",

"result" => [

"add-namespace",

"add-schema-location",

"delete-snapshot",

"full-replace-deployment",

"list-snapshots",

"read-attribute",

"read-children-names",

"read-children-resources",

"read-children-types",

"read-config-as-xml",

"read-operation-description",

"read-operation-names",

"read-resource",

"read-resource-description",

"reload",

"remove-namespace",

"remove-schema-location",

"replace-deployment",

"shutdown",

"take-snapshot",

"upload-deployment-bytes",

"upload-deployment-stream",

"upload-deployment-url",

"validate-address",

"write-attribute"

]

}

[standalone@localhost:9990 /] :read-operation-description(name=upload-deployment-url)

{

"outcome" => "success",

"result" => {

"operation-name" => "upload-deployment-url",

"description" => "Indicates that the deployment content available at the included URL should be added to the deployment content repository. Note that this operation does not indicate the content should be deployed into the runtime.",

"request-properties" => {"url" => {

"type" => STRING,

"description" => "The URL at which the deployment content is available for upload to the domain's or standalone server's deployment content repository.. Note that the URL must be accessible from the target of the operation (i.e. the Domain Controller or standalone server).",

"required" => true,

"min-length" => 1,

"nillable" => false

}},

"reply-properties" => {

"type" => BYTES,

"description" => "The hash of managed deployment content that has been uploaded to the domain's or standalone server's deployment content repository.",

"min-length" => 20,

"max-length" => 20,

"nillable" => false

}

}

}See Descriptions below for more on how to learn about the operations a resource exposes.

2.4.3. Attributes

Management resources expose information about their state as attributes.

Attributes have string name, and a value of type

org.jboss.dmr.ModelNode (or: for the CLI, the text representation of a

ModelNode; for HTTP API, the JSON representation of a ModelNode.)

Attributes can either be read-only or read-write. Reading and writing

attribute values is done via the global read-attribute and

write-attribute operations.

The read-attribute operation takes a single parameter "name" whose

value is a the name of the attribute. For example, to read the "port"

attribute of a socket-binding resource via the CLI:

[standalone@localhost:9990 /] /socket-binding-group=standard-sockets/socket-binding=https:read-attribute(name=port)

{

"outcome" => "success",

"result" => 8443

}If an attribute is writable, the write-attribute operation is used to

mutate its state. The operation takes two parameters:

-

name– the name of the attribute -

value– the value of the attribute

For example, to read the "port" attribute of a socket-binding resource via the CLI:

[standalone@localhost:9990 /] /socket-binding-group=standard-sockets/socket-binding=https:write-attribute(name=port,value=8444)

{"outcome" => "success"}Attributes can have one of two possible storage types:

-

CONFIGURATION – means the value of the attribute is stored in the persistent configuration; i.e. in the

domain.xml,host.xmlorstandalone.xmlfile from which the resource’s configuration was read. -

RUNTIME – the attribute value is only available from a running server; the value is not stored in the persistent configuration. A metric (e.g. number of requests serviced) is a typical example of a RUNTIME attribute.

The values of all of the attributes a resource exposes can be obtained

via the read-resource operation, with the "include-runtime" parameter

set to "true". For example, from the CLI:

[standalone@localhost:9990 /] /subsystem=undertow/server=default-server/http-listener=default:read-resource(include-runtime=true)

{

"outcome" => "success",

"result" => {

"allow-encoded-slash" => false,

"allow-equals-in-cookie-value" => false,

"always-set-keep-alive" => true,

"buffer-pipelined-data" => true,

"buffer-pool" => "default",

"bytes-received" => 0L,

"bytes-sent" => 0L,

"certificate-forwarding" => false,

"decode-url" => true,

"disallowed-methods" => ["TRACE"],

"enable-http2" => false,

"enabled" => true,

"error-count" => 0L,

"max-buffered-request-size" => 16384,

"max-connections" => undefined,

"max-cookies" => 200,

"max-header-size" => 1048576,

"max-headers" => 200,

"max-parameters" => 1000,

"max-post-size" => 10485760L,

"max-processing-time" => 0L,

"no-request-timeout" => undefined,

"processing-time" => 0L,

"proxy-address-forwarding" => false,

"read-timeout" => undefined,

"receive-buffer" => undefined,

"record-request-start-time" => false,

"redirect-socket" => "https",

"request-count" => 0L,

"request-parse-timeout" => undefined,

"resolve-peer-address" => false,

"send-buffer" => undefined,

"socket-binding" => "http",

"tcp-backlog" => undefined,

"tcp-keep-alive" => undefined,

"url-charset" => "UTF-8",

"worker" => "default",

"write-timeout" => undefined

}

}Omit the "include-runtime" parameter (or set it to "false") to limit output to those attributes whose values are stored in the persistent configuration:

[standalone@localhost:9990 /] /subsystem=undertow/server=default-server/http-listener=default:read-resource(include-runtime=false)

{

"outcome" => "success",

"result" => {

"allow-encoded-slash" => false,

"allow-equals-in-cookie-value" => false,

"always-set-keep-alive" => true,

"buffer-pipelined-data" => true,

"buffer-pool" => "default",

"certificate-forwarding" => false,

"decode-url" => true,

"disallowed-methods" => ["TRACE"],

"enable-http2" => false,

"enabled" => true,

"max-buffered-request-size" => 16384,

"max-connections" => undefined,

"max-cookies" => 200,

"max-header-size" => 1048576,

"max-headers" => 200,

"max-parameters" => 1000,

"max-post-size" => 10485760L,

"no-request-timeout" => undefined,

"proxy-address-forwarding" => false,

"read-timeout" => undefined,

"receive-buffer" => undefined,

"record-request-start-time" => false,

"redirect-socket" => "https",

"request-parse-timeout" => undefined,

"resolve-peer-address" => false,

"send-buffer" => undefined,

"socket-binding" => "http",

"tcp-backlog" => undefined,

"tcp-keep-alive" => undefined,

"url-charset" => "UTF-8",

"worker" => "default",

"write-timeout" => undefined

}

}See Descriptions below for how to learn more about the attributes a particular resource exposes.

Override an Attribute Value with an Environment Variable

It is possible to override the value of any simple attribute by providing an environment variable with a name that maps to the attribute (and its resource).

|

Complex attributes (which have their |

If there is an environment variable with such a name, the management resource will use the value of this environment variable when the management resource validates and sets the attribute value. This takes place before the attribute value is resolved (if it contains an expression) or corrected.

|

This feature is disabled by default. To enable it, the environment variable

|

Mapping between the resource address and attribute and the environment variable

The name of the environment variable is based on the address of the resource and the name of the attribute:

-

take the address of the resource (e.g.

/subsystem=undertow/server=default-server/http-listener=default)-

/subsystem=undertow/server=default-server/http-listener=default

-

-

remove the leading slash (

/)-

subsystem=undertow/server=default-server/http-listener=default

-

-

append two underscores (

__) and the name of the attribute (e.g.proxy-address-forwarding)-

subsystem=undertow/server=default-server/http-listener=default__proxy-address-forwarding

-

-

Replace all non-alphanumeric characters with an underscore (

_) and put it in upper case-

SUBSYSTEM_UNDERTOW_SERVER_DEFAULT_SERVER_HTTP_LISTENER_DEFAULT__PROXY_ADDRESS_FORWARDING

-

If WildFly is started with that environment variable, the value of the proxy-address-forwarding attribute on the

/subsystem=undertow/server=default-server/http-listener=default will be set to the value of the environment variable:

$ WILDFLY_OVERRIDING_ENV_VARS=1 \

SUBSYSTEM_UNDERTOW_SERVER_DEFAULT_SERVER_HTTP_LISTENER_DEFAULT__PROXY_ADDRESS_FORWARDING=false \

./bin/standalone.sh

$ ./bin/jboss-cli.sh -c --command="/subsystem=undertow/server=default-server/http-listener=default:read-attribute(name=proxy-address-forwarding)"

{

"outcome" => "success",

"result" => "false"|

If an attribute value is determined from an environment variable, the next time the configuration is persisted, that value from the environment variable will be persisted. Until an operation triggers such persistence of the configuration file, the configuration file will not reflect the current running configuration. |

2.4.4. Children

Management resources may support child resources. The

types of children a

resource supports (e.g. connector for the web subsystem resource) can

be obtained by querying the resource’s description (see

Descriptions below)

or by invoking the read-children-types operation. Once you know the

legal child types, you can query the names of all children of a given

type by using the global read-children-types operation. The operation

takes a single parameter "child-type" whose value is the type. For

example, a resource representing a socket binding group has children. To

find the type of those children and the names of resources of that type

via the CLI one could:

[standalone@localhost:9990 /] /socket-binding-group=standard-sockets:read-children-types

{

"outcome" => "success",

"result" => ["socket-binding"]

}

[standalone@localhost:9990 /] /socket-binding-group=standard-sockets:read-children-names(child-type=socket-binding)

{

"outcome" => "success",

"result" => [

"http",

"https",

"jmx-connector-registry",

"jmx-connector-server",

"jndi",

"remoting",

"txn-recovery-environment",

"txn-status-manager"

]

}2.4.5. Descriptions

All resources expose metadata that describes their attributes,

operations and child types. This metadata is itself obtained by invoking

one or more of the global operations each

resource supports. We showed examples of the read-operation-names,

read-operation-description, read-children-types and

read-children-names operations above.

The read-resource-description operation can be used to find the

details of the attributes and child types associated with a resource.

For example, using the CLI:

[standalone@localhost:9990 /] /socket-binding-group=standard-sockets:read-resource-description

{

"outcome" => "success",

"result" => {

"description" => "Contains a list of socket configurations.",

"head-comment-allowed" => true,

"tail-comment-allowed" => false,

"attributes" => {

"name" => {

"type" => STRING,

"description" => "The name of the socket binding group.",

"required" => true,

"head-comment-allowed" => false,

"tail-comment-allowed" => false,

"access-type" => "read-only",

"storage" => "configuration"

},

"default-interface" => {

"type" => STRING,

"description" => "Name of an interface that should be used as the interface for any sockets that do not explicitly declare one.",

"required" => true,

"head-comment-allowed" => false,

"tail-comment-allowed" => false,

"access-type" => "read-write",

"storage" => "configuration"

},

"port-offset" => {

"type" => INT,

"description" => "Increment to apply to the base port values defined in the socket bindings to derive the runtime values to use on this server.",

"required" => false,

"head-comment-allowed" => true,

"tail-comment-allowed" => false,

"access-type" => "read-write",

"storage" => "configuration"

}

},

"operations" => {},

"children" => {"socket-binding" => {

"description" => "The individual socket configurtions.",

"min-occurs" => 0,

"model-description" => undefined

}}

}

}Note the

"operations" ⇒ }} in the output above. If the command had included the {{operations

parameter (i.e.

/socket-binding-group=standard-sockets:read-resource-description(operations=true))

the output would have included the description of each operation

supported by the resource.

See the Global operations section for

details on other parameters supported by the read-resource-description

operation and all the other globally available operations.

2.4.6. Comparison to JMX MBeans

WildFly management resources are conceptually quite similar to Open MBeans. They have the following primary differences:

-

WildFly management resources are organized in a tree structure. The order of the key value pairs in a resource’s address is significant, as it defines the resource’s position in the tree. The order of the key properties in a JMX

ObjectNameis not significant. -

In an Open MBean attribute values, operation parameter values and operation return values must either be one of the simple JDK types (String, Boolean, Integer, etc) or implement either the

javax.management.openmbean.CompositeDatainterface or thejavax.management.openmbean.TabularDatainterface. WildFly management resource attribute values, operation parameter values and operation return values are all of typeorg.jboss.dmr.ModelNode.

2.4.7. Basic structure of the management resource trees

As noted above, management resources are organized in a tree structure. The structure of the tree depends on whether you are running a standalone server or a managed domain.

Standalone server

The structure of the managed resource tree is quite close to the

structure of the standalone.xml configuration file.

-

The root resource

-

extension– extensions installed in the server -

path– paths available on the server -

system-property– system properties set as part of the configuration (i.e. not on the command line) -

core-service=management– the server’s core management services -

core-service=service-container– resource for the JBoss MSCServiceContainerthat’s at the heart of the AS -

subsystem– the subsystems installed on the server. The bulk of the management model will be children of typesubsystem -

interface– interface configurations -

socket-binding-group– the central resource for the server’s socket bindings-

socket-binding– individual socket binding configurations

-

-

deployment– available deployments on the server

-

Managed domain

In a managed domain, the structure of the managed resource tree spans

the entire domain, covering both the domain wide configuration (e.g.

what’s in domain.xml, the host specific configuration for each host

(e.g. what’s in host.xml, and the resources exposed by each running

application server. The Host Controller processes in a managed domain

provide access to all or part of the overall resource tree. How much is

available depends on whether the management client is interacting with

the Host Controller that is acting as the Domain Controller. If

the Host Controller is the Domain Controller, then the section of

the tree for each host is available. If the Host Controller is a secondary Host Controller

to a remote Domain Controller, then only the portion of the tree

associated with that host is available.

-

The root resource for the entire domain. The persistent configuration associated with this resource and its children, except for those of type

host, is persisted in thedomain.xmlfile on the Domain Controller.-

extension– extensions available in the domain -

path– paths available on across the domain -

system-property– system properties set as part of the configuration (i.e. not on the command line) and available across the domain -

profile– sets of subsystem configurations that can be assigned to server groups-

subsystem– configuration of subsystems that are part of the profile

-

-

interface– interface configurations -

socket-binding-group– sets of socket bindings configurations that can be applied to server groups-

socket-binding– individual socket binding configurations

-

-

deployment– deployments available for assignment to server groups -

deployment-overlay — deployment-overlays content available to overlay deployments in server groups

-

server-group– server group configurations -

host – the individual Host Controllers. Each child of this type represents the root resource for a particular host. The persistent configuration associated with one of these resources or its children is persisted in the host’s

host.xmlfile.-

path– paths available on each server on the host -

system-property– system properties to set on each server on the host -

core-service=management– the Host Controller’s core management services -

interface– interface configurations that apply to the Host Controller or servers on the host -

jvm– JVM configurations that can be applied when launching servers -

server-config– configuration describing how the Host Controller should launch a server; what server group configuration to use, and any server-specific overrides of items specified in other resources -

server– the root resource for a running server. Resources from here and below are not directly persisted; the domain-wide and host level resources contain the persistent configuration that drives a server-

extension– extensions installed in the server -

path– paths available on the server -

system-property– system properties set as part of the configuration (i.e. not on the command line) -

core-service=management– the server’s core management services -

core-service=service-container– resource for the JBoss MSCServiceContainerthat’s at the heart of the AS -

subsystem– the subsystems installed on the server. The bulk of the management model will be children of typesubsystem -

interface– interface configurations -

socket-binding-group– the central resource for the server’s socket bindings-

socket-binding– individual socket binding configurations

-

-

deployment– available deployments on the server -

deployment-overlay — available overlays on the server

-

-

-

3. Management Clients

WildFly offers three different approaches to configure and manage servers: a web interface, a command line client and a set of XML configuration files. Regardless of the approach you choose, the configuration is always synchronized across the different views and finally persisted to the XML files.

3.1. Web Management Interface

The web interface is a GWT application that uses the HTTP management API to configure a management domain or standalone server.

3.1.1. HTTP Management Endpoint

The HTTP API endpoint is the entry point for management clients that rely on the HTTP protocol to integrate with the management layer. It uses a JSON encoded protocol and a de-typed, RPC style API to describe and execute management operations against a managed domain or standalone server. It’s used by the web console, but offers integration capabilities for a wide range of other clients too.

The HTTP API endpoint is co-located with either the domain controller or a standalone server. By default, it runs on port 9990:

<management-interfaces>

[...]

<http-interface http-authentication-factory="management-http-authentication">

<http-upgrade enabled="true" sasl-authentication-factory="management-sasl-authentication"/>

<socket-binding http="management-http"/>

</http-interface>

<management-interfaces>~(See standalone/configuration/standalone.xml or domain/configuration/host.xml)~

The HTTP API Endpoint serves two different contexts. One for executing management operations and another one that allows you to access the web interface:

-

Domain API: http://<host>:9990/management

-

Web Console: http://<host>:9990/console

3.1.2. Accessing the web console

The web console is served through the same port as the HTTP management API. It can be accessed by pointing your browser to:

Default URL

| By default the web interface can be accessed here: http://localhost:9990/console. |

3.1.3. Custom HTTP Headers

For the responses returned from the HTTP management interface it is also possible to define custom constant HTTP headers that will be added to any response based on matching a configured prefix against the request path.

As an example it could be desirable to add a HTTP header X-Help which points users to the correct location to obtain assistance. The following management operation can be executed within the CLI to activate returning this header on all requests.

[standalone@localhost:9990 /] /core-service=management/management-interface=http-interface: \

write-attribute(name=constant-headers, value=[{path="/", \

headers=[{name="X-Help", value="wildfly.org"}]}])The responses to all requests to the HTTP management interface will now include the header X-Help with the value wildfly.org.

The resulting configuration will look like: -

<management-interfaces>

<http-interface http-authentication-factory="management-http-authentication">

<http-upgrade enabled="true" sasl-authentication-factory="management-sasl-authentication"/>

<socket-binding http="management-http"/>

<constant-headers>

<header-mapping path="/">

<header name="X-Help" value="wildfly.org"/>

</header-mapping>

</constant-headers>

</http-interface>

</management-interfaces>The example here has illustrated adding a single header for all requests matching the path prefix / i.e. every request. More advanced mappings can be defined by specifying a mapping for a more specific path prefix such as /management.

If a request matches multiple mappings such as a request to /management where mappings for / and /management have been specified the headers from all of the mappings will be applied to the corresponding request.

Within a single mapping it is also possible to define multiple headers which should be set on the corresponding response.

As the constant-headers attribute is set verification will be performed to verify that the HTTP headers specified are only making use of allowed characters as specified in the HTTP specification RFCs.

Additionally as they have special handling within the management interface overriding the following headers is disallowed and attempts to set these will result in an error being reported.

-

Connection -

Content-Length -

Content-Type -

Date -

Transfer-Encoding

The configured headers are set at the very end of processing the request immediately before the response is returned to the client, this will mean any of the configured headers will override the same headers set by the corresponding endpoint.

3.2. Command Line Interface

The Command Line Interface (CLI) is a management tool for a managed domain or standalone server. It allows a user to connect to the domain controller or a standalone server and execute management operations available through the de-typed management model.

3.2.1. Running the CLI

Depending on the operating system, the CLI is launched using

jboss-cli.sh or jboss-cli.bat located in the WildFly bin

directory. For further information on the default directory structure,

please consult the " Getting Started

Guide".

The first thing to do after the CLI has started is to connect to a managed WildFly instance. This is done using the command connect, e.g.

./bin/jboss-cli.sh

You are disconnected at the moment. Type 'connect' to connect to the server

or 'help' for the list of supported commands.

[disconnected /]

[disconnected /] connect

[domain@localhost:9990 /]

[domain@localhost:9990 /] quit

Closed connection to localhost:9990localhost:9990 is the default host and port combination for the

WildFly CLI client.

The host and the port of the server can be provided as an optional parameter, if the server is not listening on localhost:9990.

./bin/jboss-cli.sh

You are disconnected at the moment. Type 'connect' to connect to the server

[disconnected /] connect 192.168.0.10:9990

Connected to standalone controller at 192.168.0.1:9990The :9990 is not required as the CLI will use port 9990 by default. The port needs to be provided if the server is listening on some other port.

To terminate the session type quit.

| The jboss-cli script accepts a --connect parameter: ./jboss-cli.sh --connect |

The --controller parameter can be used to specify the host and port of the server: ./jboss-cli.sh --connect --controller=192.168.0.1:9990

Help is also available:

In order to list the set of commands that are currently available in the current context use the option --commands (NB: the following examples are not displaying an exhaustive set of CLI commands, more and/or different commands could be available in your running CLI instance):

[domain@localhost:9990 /] help --commands

Commands available in the current context:

batch connection-factory deployment-overlay if patch reload try

cd connection-info echo jdbc-driver-info pwd rollout-plan undeploy

clear data-source echo-dmr jms-queue quit run-batch unset

command deploy help jms-topic read-attribute set version

connect deployment-info history ls read-operation shutdown xa-data-source

To read a description of a specific command execute 'help <command name>'.The help command can print help for any command or operation. For operations, the operation description is formatted as a command help (synopsis, description and options). Some commands (eg: patch) expose two levels of documentation. A high level description for the command itself and a dedicated help content for each action (eg: apply). The help documentation of each command makes it clear if this two levels are available or not.

Use Tab-completion to discover the set of commands and operations:

help <TAB>The list of all commands (enabled or not) is displayed.

Examples

-

Display the help of the patch command:

help patch-

Display the help of the apply action of the patch command:

help patch apply-

Display the description of the elytron key-store resource add operation formatted as a command help content:

help /subsystem=elytron/key-store=?:add3.2.2. Keyboard navigation

In order to efficiently edit commands, the CLI allows you to navigate the words and characters of a command using the keyboard.

NB: Part of this navigation is platform dependent.

Go left (back) one word

-

Alt+B : Linux, Solaris, HP-UX, Windows.

-

Ctrl+LeftArrow: Linux, Solaris, HP-UX.

-

Alt+LeftArrow: Mac OSX.

Go right (forward) one word

-

Alt+F : Linux, Solaris, HP-UX, Windows.

-

Ctrl+RightArrow: Linux, Solaris, HP-UX.

-

Alt+RightArrow: Mac OSX.

Go to the beginning of the line

-

Ctrl+A: All supported platforms.

-

HOME: Linux, Solaris, HP-UX, Windows

Go to the end of the line

-

Ctrl+E: All supported platforms.

-

END: Linux, Solaris, HP-UX, Windows

Go left (back) one character

-

Ctrl+B or LeftArrow: All supported platforms.

Go right (forward) one character

-

Ctrl+F or RightArrow: All supported platforms.

3.2.3. Non-interactive Mode

The CLI can also be run in non-interactive mode to support scripts and other types of command line or batch processing. The --command and --commands arguments can be used to pass a command or a list of commands to execute. Additionally a --file argument is supported which enables CLI commands to be provided from a text file.

For example the following command can be used to list all the current deployments

$ ./bin/jboss-cli.sh --connect --commands=ls\ deployment

sample.war

business.jarThe output can be combined with other shell commands for further processing, for example to find out what .war files are deployed:

$ ./bin/jboss-cli.sh --connect --commands=ls\ deployment | grep war

sample.warIn order to match a command with its output, you can provide the option

--echo-command (or add the XML element <echo-command> to the CLI

configuration file) in order to make the CLI to include the prompt

command + options in the output. With this option enabled, any executed

command will be added to the output.

3.2.4. Command timeout

By default CLI command and operation executions are not timely bounded. It means that a command never ending its execution will make the CLI process to be stuck and unresponsive. To protect the CLI from this behavior, one can set a command execution timeout.

Command Timeout behavior

In interactive mode, when a timeout occurs, an error message is displayed then the console prompt is made available to type new commands. In non interactive mode (executing a script or a list of commands), when a timeout occurs, an exception is thrown and the CLI execution is stopped. In both modes (interactive and non interactive), when a timeout occurs, the CLI will make a best effort to cancel the associated server side activities.

Configuring the Command timeout

-

Add the XML element <command-timeout>\{num seconds}</command-timeout> to the CLI XML configuration file.

-

Add the option - -command-timeout=\{num seconds} to the CLI command line. This will override any value set in the XML configuration file.

Managing the Command Timeout

Once the CLI is running, the timeout can be adjusted to cope with the commands to execute. For example a batch command will need a longer timeout than a non batch one. The command command-timeout allows to get, set and reset the command timeout.

Retrieving the command timeout

The command command-timeout get displays the current timeout in seconds. A timeout of 0 means no timeout.

[standalone@localhost:9990 /] command-timeout get

0Setting the command timeout

The command command-timeout set update the timeout value to a number of seconds. If a timeout has been set via configuration (XML file or option), it is overridden by the set action.

[standalone@localhost:9990 /] command-timeout set 10Resetting the command timeout

The command command-timeout reset \{config|default} allows to set the timeout to its configuration value (XML file or option) or default value (0 second). If no configuration value is set, resetting to the configuration value sets the timeout to its default value (0 seconds).

[standalone@localhost:9990 /] command-timeout reset config

[standalone@localhost:9990 /] command-timeout reset default3.2.5. Default Native Management Interface Security

The native interface shares the same security configuration as the http interface, however we also support a local authentication mechanism which means that the CLI can authenticate against the local WildFly instance without prompting the user for a username and password. This mechanism only works if the user running the CLI has read access to the standalone/tmp/auth folder or domain/tmp/auth folder under the respective WildFly installation - if the local mechanism fails then the CLI will fallback to prompting for a username and password for a user configured as in Default HTTP Interface Security.

Establishing a CLI connection to a remote server will require a username and password by default.

3.2.6. Operation Requests

Operation requests allow for low level interaction with the management model. They are different from the high level commands (i.e. create-jms-queue) in that they allow you to read and modify the server configuration as if you were editing the XML configuration files directly. The configuration is represented as a tree of addressable resources, where each node in the tree (aka resource) offers a set of operations to execute.

An operation request basically consists of three parts: The address, an operation name and an optional set of parameters.

The formal specification for an operation request is:

[/node-type=node-name (/node-type=node-name)*] : operation-name [( [parameter-name=parameter-value (,parameter-name=parameter-value)*] )]

For example:

/subsystem=logging/root-logger=ROOT:change-root-log-level(level=WARN)Tab Completion

Tab-completion is supported for all commands and options, i.e. node-types and node-names, operation names and parameter names.

In operation Tab-completion, required parameters have a name terminated by the '*' character. This helps identify which are the parameters that must be set in order to construct a valid operation. Furthermore, Tab-completion does not propose parameters that are alternatives of parameters already present in the operation.

For example:

/deployment=myapp:add(<TAB>

! content* enabled runtime-nameThe parameter content is required and completion advertises it with a '*' character.

/deployment=myapp:add-content(content=[{<TAB>

bytes* hash* input-stream-index* target-path* url*bytes, hash, input-stream-index and url are required but also alternatives (only one of them can be set). As soon as one of these parameter has been set, the others are no longer proposed by completion.

/deployment=myapp:add-content(content=[{url=myurl,<TAB>

/deployment=myapp:add-content(content=[{url=myurl,target-pathtarget-path argument is automatically inlined in the command.

| We are also considering adding aliases that are less verbose for the user, and will translate into the corresponding operation requests in the background. |

Whitespaces between the separators in the operation request strings are not significant.

Addressing resources

Operation requests might not always have the address part or the parameters. E.g.

:read-resourcewhich will list all the node types for the current node.

To syntactically disambiguate between the commands and operations, operations require one of the following prefixes:

To execute an operation against the current node, e.g.

cd subsystem=logging

:read-resource(recursive="true")To execute an operation against a child node of the current node, e.g.

cd subsystem=logging

./root-logger=ROOT:change-root-log-level(level=WARN)To execute an operation against the root node, e.g.

/:read-resourceAvailable Operation Types and Descriptions

The operation types can be distinguished between common operations that exist on any node and specific operations that belong to a particular configuration resource (i.e. subsystem). The common operations are:

-

add

-

read-attribute

-

read-children-names

-

read-children-resources

-

read-children-types

-

read-operation-description

-

read-operation-names

-

read-resource

-

read-resource-description

-

remove

-

validate-address

-

write-attribute

For a list of specific operations (e.g. operations that relate to the logging subsystem) you can always query the model itself. For example, to read the operations supported by the logging subsystem resource on a standalone server:

[[standalone@localhost:9990 /] /subsystem=logging:read-operation-names

{

"outcome" => "success",

"result" => [

"add",

"change-root-log-level",

"read-attribute",

"read-children-names",

"read-children-resources",

"read-children-types",

"read-operation-description",

"read-operation-names",

"read-resource",

"read-resource-description",

"remove-root-logger",

"root-logger-assign-handler",

"root-logger-unassign-handler",

"set-root-logger",

"validate-address",

"write-attribute"

]

}As you can see, the logging resource offers four additional operations, namely root-logger-assign-handler, root-logger-unassign-handler, set-root-logger and remove-root-logger.

Further documentation about a resource or operation can be retrieved through the description:

[standalone@localhost:9990 /] /subsystem=logging:read-operation-description(name=change-root-log-level)

{

"outcome" => "success",

"result" => {

"operation-name" => "change-root-log-level",

"description" => "Change the root logger level.",

"request-properties" => {"level" => {

"type" => STRING,

"description" => "The log level specifying which message levels will be logged by this logger.

Message levels lower than this value will be discarded.",

"required" => true

}}

}

}Full model

To see the full model enter :read-resource(recursive=true).

|

3.2.7. Command History

Command (and operation request) history is enabled by default. The history is kept both in-memory and in a file on the disk, i.e. it is preserved between command line sessions. The history file name is .jboss-cli-history and is automatically created in the user’s home directory. When the command line interface is launched this file is read and the in-memory history is initialized with its content.

| While in the command line session, you can use the arrow keys to go back and forth in the history of commands and operations. |

To manipulate the history you can use the history command. If executed without any arguments, it will print all the recorded commands and operations (up to the configured maximum, which defaults to 500) from the in-memory history.

history supports three optional arguments:

-

disable - will disable history expansion (but will not clear the previously recorded history);

-

enabled - will re-enable history expansion (starting from the last recorded command before the history expansion was disabled);

-

clear - will clear the in-memory history (but not the file one).

3.2.8. JSON and DMR output

By default the CLI prints operation results using the DMR textual syntax. There are two ways to make the CLI to display JSON:

-

--output-json option when launching the CLI.

-

<output-json> XML element added to jboss-cli.xml configuration file.

3.2.9. Color output

The CLI outputs results of commands and the prompt in color. To disable this, there are two possible ways to disable it:

-

--no-color-output will disable color output;

-

Change <enabled> to

falseinjboss-cli.xml.

The <color-output> block is used to configure the colors of the six basic elements that do support it

-

Output messages: error, warning and success;

-

Required configuration options when using the auto-complete functionality;

-

The color of the default prompt;

-

The color of the prompt when using

batchand any of the workflow commands,if,forandtry.

<color-output>

<enabled>true</enabled>

<error-color>red</error-color>

<warn-color>yellow</warn-color>

<success-color>default</success-color>

<required-color>magenta</required-color>

<workflow-color>green</workflow-color>

<prompt-color>blue</prompt-color>

</color-output>There are eight available colors:

Black |

Magenta |

Blue |

Red |

Cyan |

White |

Green |

Yellow |

There is also the possibility of using the default color, which is the terminal’s configured foreground color.

3.2.10. Paging and searching output

In interactive mode, when the content to display is longer than the terminal height, the content is paged. You can navigate the content by using the following keys and mouse events:

-

space or PAGE_DOWN: scroll the content one page down.

-

'\' or PAGE_UP: scroll the content one page up.

-

';' or up arrow or mouse wheel up: scroll the content one line up.

-

ENTER or down arrow or mouse wheel down: scroll the content one line down.

-

HOME or 'g': scroll to the top of the content. NB: HOME is only supported for keyboards containing this key.

-

END or 'G': scroll to the bottom of the content. NB: END is only supported for keyboards containing this key.

-

'q' or 'Q' or ESC: exit the paging.

NB: When the end of the content is reached (using ENTER, space, …) the paging is automatically exited.

It is possible to search for text when the content is paged. Search is operated with the following keys:

-

'/' to display prompt allowing to type some text. Type return to launch the search.

You can use up/down arrows to retrieve previously typed text. NB: search history is not persisted when CLI process exits. -

'n' to jump to the next match if any. If no search text has been typed, then the last entry from the search history is used.

-

'N' to jump to the previous match if any. If no search text has been typed, then the last entry from the search history is used.

There are two possible ways to disable the output paging and write the whole output of the commands at once:

-

--no-output-paging command line parameter will disable the output paging;

-

Add <output-paging>false<output-paging> in

jboss-cli.xml.

| On Windows, searching and navigating backward is only supported starting with Windows 10 and Windows Server 2016. |

| If the CLI process is sent the signal KILL(9) while it is paging, the terminal will stay in alternate mode. This makes the terminal to behave in an unexpected manner (display and mouse events). In order to restore the terminal state call: tput rmcup. |

3.2.11. Batch Processing

The batch mode allows one to group commands and operations and execute them together as an atomic unit. If at least one of the commands or operations fails, all the other successfully executed commands and operations in the batch are rolled back.

Not all of the commands are allowed in the batch. For example, commands like cd, ls, help, etc. are not allowed in the batch since they don’t translate into operation requests. Only the commands that translate into operation requests are allowed in the batch. The batch, actually, is executed as a composite operation request.

The batch mode is entered by executing command batch.

[standalone@localhost:9990 /] batch [standalone@localhost:9990 / #] /subsystem=datasources/data-source="java\:\/H2DS":enable [standalone@localhost:9990 / #] /subsystem=messaging-activemq/server=default/jms-queue=newQueue:add

You can execute a batch using the run-batch command:

[standalone@localhost:9990 / #] run-batch

The batch executed successfully.Exit the batch edit mode without losing your changes:

[standalone@localhost:9990 / #] holdback-batch

[standalone@localhost:9990 /]Then activate it later on again:

[standalone@localhost:9990 /] batch

Re-activated batch

#1 /subsystem=datasources/data-source=java:/H2DS:\/H2DS:enableThere are several other notable batch commands available as well (tab complete to see the list):

-

clear-batch

-

edit-batch-line (e.g. edit-batch line 3 create-jms-topic name=mytopic)

-

remove-batch-line (e.g. remove-batch-line 3)

-

move-batch-line (e.g. move-batch-line 3 1)

-

discard-batch

3.2.12. Operators

CLI has some operators that are similar to shell operators:

-

> To redirect the output of a command/operation to a file:

:read-resource > my-file.txt

-

>> To redirect the output of a command/operation and append it at the end of a file:

:read-resource >> my-file.txt

-

| To redirect the output of a command/operation to the grep command:

:read-resource | grep undefined

3.3. Default HTTP Interface Security

WildFly is distributed secured by default. The default security mechanism is username / password based making use of HTTP Digest for the authentication process.

The reason for securing the server by default is so that if the management interfaces are accidentally exposed on a public IP address authentication is required to connect - for this reason there is no default user in the distribution.

The user are stored in a properties file called mgmt-users.properties under standalone/configuration and domain/configuration depending on the running mode of the server, these files contain the users username along with a pre-prepared hash of the username along with the name of the realm and the users password.

| Although the properties files do not contain the plain text passwords they should still be guarded as the pre-prepared hashes could be used to gain access to any server with the same realm if the same user has used the same password. |

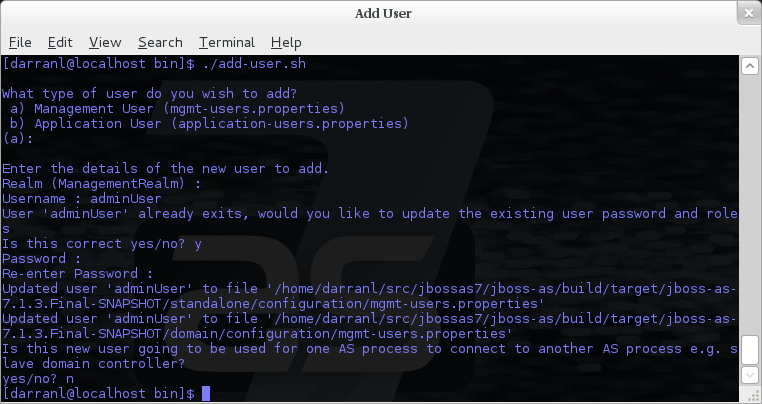

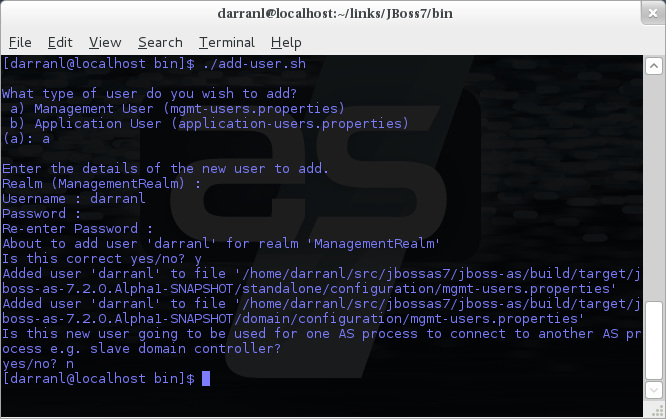

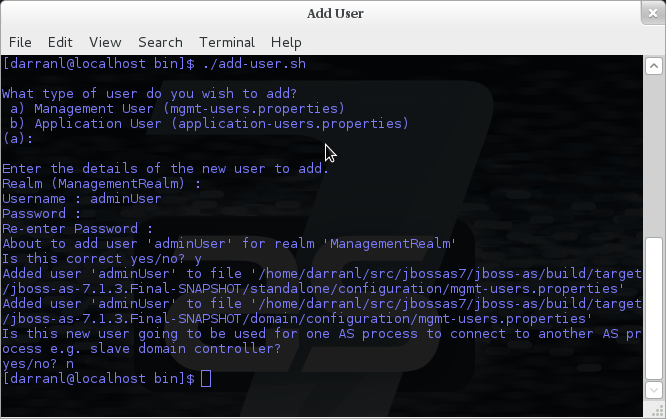

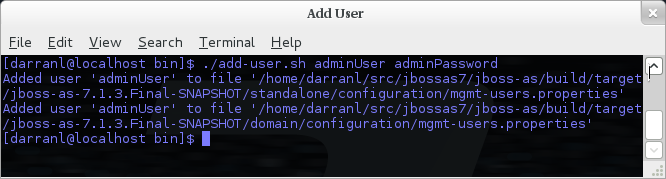

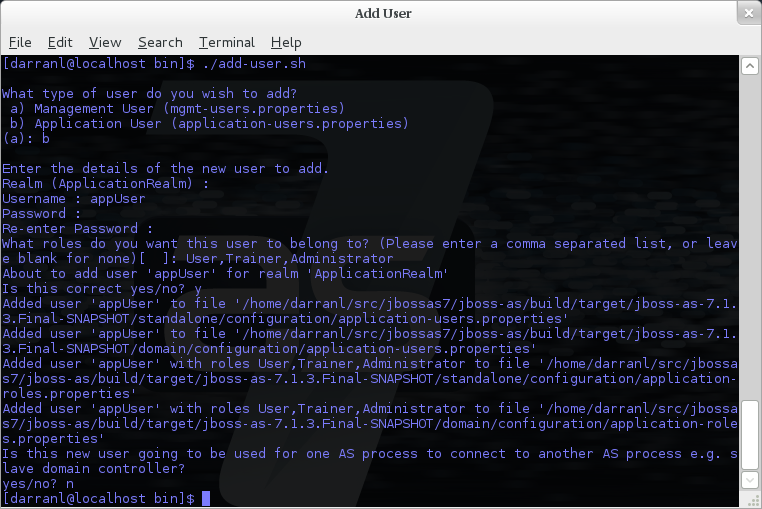

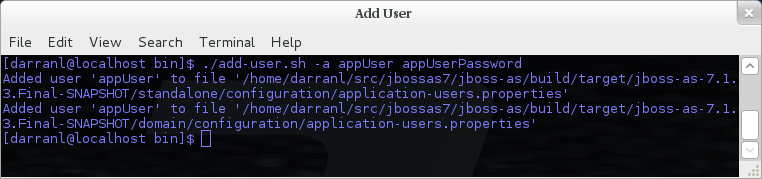

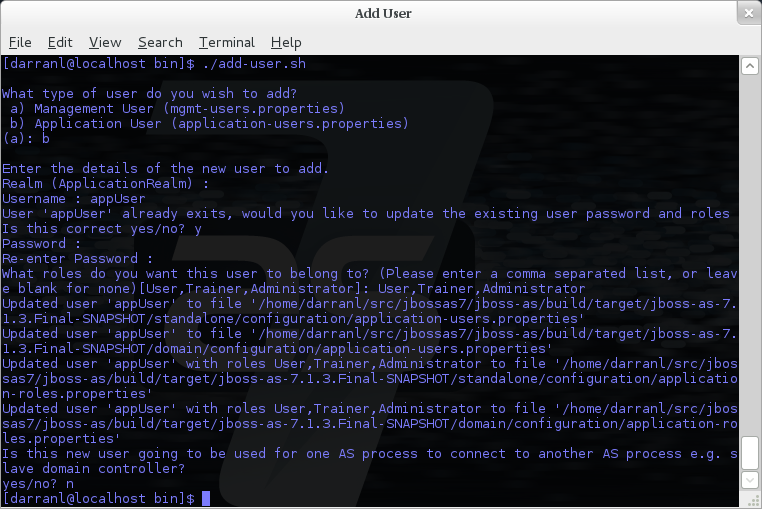

To manipulate the files and add users we provide a utility add-user.sh

and add-user.bat to add the users and generate the hashes, to add a user

you should execute the script and follow the guided process.

The full details of the add-user utility are described later but for the

purpose of accessing the management interface you need to enter the

following values: -

-

Type of user - This will be a 'Management User' to selection option a.

-

Realm - This MUST match the realm name used in the configuration so unless you have changed the configuration to use a different realm name leave this set as 'ManagementRealm'.

-

Username - The username of the user you are adding.

-

Password - The users password.

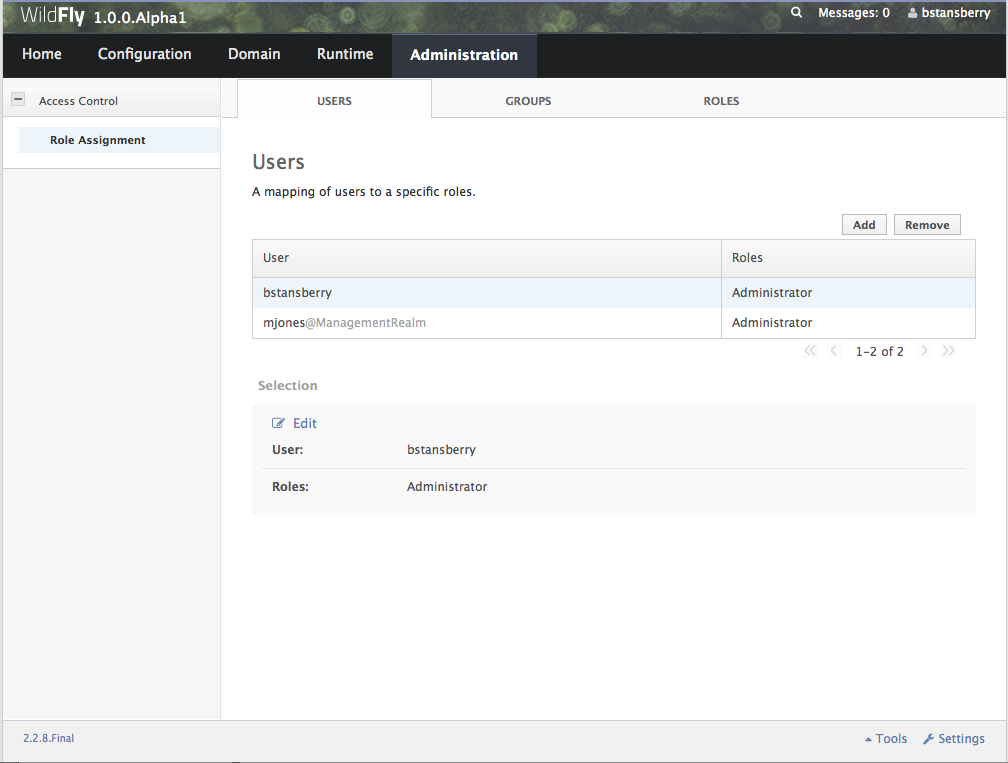

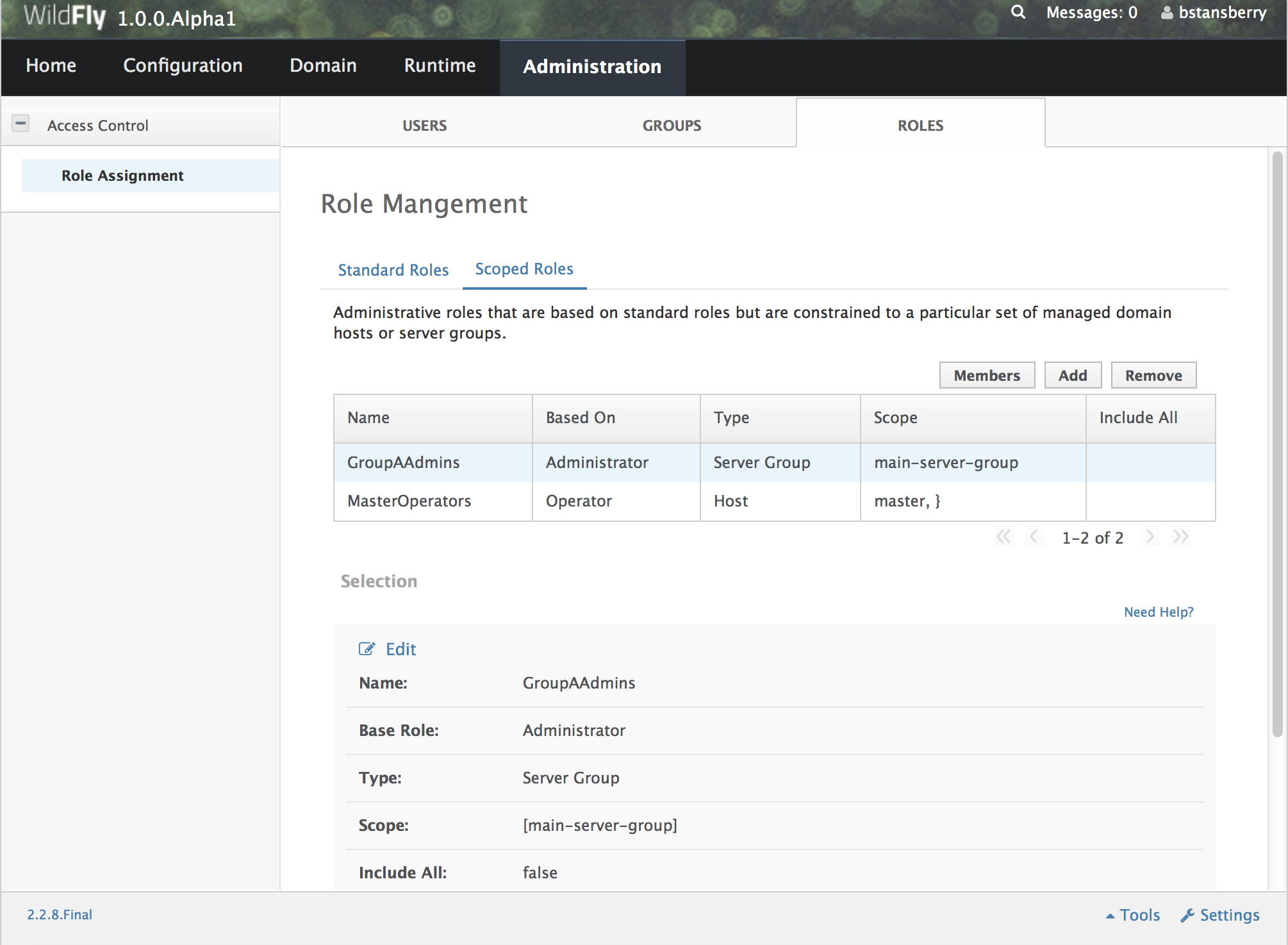

Provided the validation passes you will then be asked to confirm you want to add the user and the properties files will be updated.