© 2017–2022 The original authors.

1. Introduction To High Availability Services

1.1. What are High Availability services?

WildFly’s High Availability services are used to guarantee availability of a deployed Jakarta EE application.

Deploying critical applications on a single node suffers from two potential problems:

- loss of application availability when the node hosting the application crashes (single point of failure)

- loss of application availability in the form of extreme delays in response time during high volumes of requests (overwhelmed server)

WildFly supports two features which ensure high availability of critical Jakarta EE applications:

- fail-over: allows a client interacting with a Jakarta EE application to have uninterrupted access to that application, even in the presence of node failures

- load balancing: allows a client to have timely responses from the application, even in the presence of high volumes of requests

| These two independent high availability services can very effectively inter-operate when making use of mod_cluster for load balancing! |

Taking advantage of WildFly’s high availability services is easy, and simply involves deploying WildFly on a cluster of nodes, making a small number of application configuration changes, and then deploying the application in the cluster.

We now take a brief look at what these services can guarantee.

1.2. High Availability through fail-over

Fail-over allows a client interacting with a Jakarta EE application to have uninterrupted access to that application, even in the presence of node failures. For example, consider a Jakarta EE application which makes use of the following features:

- session-oriented servlets to provide user interaction

- session-oriented Jakarta Enterprise Beans to perform state-dependent business computation

- Jakarta Enterprise Beans entity beans to store critical data in a persistent store (e.g. database)

- SSO login to the application

If the application makes use of WildFly’s fail-over services, a client interacting with an instance of that application will not be interrupted even when the node on which that instance executes crashes. Behind the scenes, WildFly makes sure that all of the user data that the application makes use of (HTTP session data, Jakarta Enterprise Beans SFSB sessions, Jakarta Enterprise Beans entities and SSO credentials) is available at other nodes in the cluster, so that when a failure occurs and the client is redirected to a new node for continuation of processing (i.e. the client "fails over" to the new node), the user’s data is available and processing can continue.

The Infinispan and JGroups subsystems are instrumental in providing these data availability guarantees and will be discussed in detail later in the guide.

1.3. High Availability through load balancing

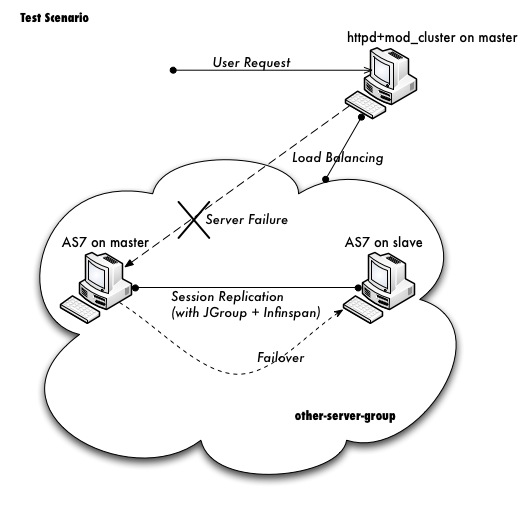

Load balancing enables the application to respond to client requests in a timely fashion, even when subjected to a high volume of requests. Using a load balancer as a front-end, each incoming HTTP request can be directed to one node in the cluster for processing. In this way, the cluster acts as a pool of processing nodes and the load is "balanced" over the pool, achieving scalability and, as a consequence, availability. Requests involving session-oriented servlets are directed to the same application instance in the pool for efficiency of processing (sticky sessions). Using mod_cluster has the advantage that changes in cluster topology (scaling the pool up or down, servers crashing) are communicated back to the load balancer, which uses them to update its load balancing activity in real time and to avoid directing requests to application instances which are no longer available.

The mod_cluster subsystem is instrumental in providing support for this High Availability feature of WildFly and will be discussed in detail later in this guide.

1.4. Aims of the guide

This guide aims to:

- provide a description of the high-availability features available in WildFly and the services they depend on

- show how the various high availability services can be configured for particular application use cases

- identify default behavior for features relating to high-availability/clustering

1.5. Organization of the guide

As high availability features and their configuration depend on the particular component they affect (e.g. HTTP sessions, Jakarta Enterprise Beans SFSB sessions, Hibernate), we organize the discussion around those Jakarta EE features. We strive to make each section as self-contained as possible. Also, when discussing a feature, we will introduce any WildFly subsystems upon which the feature depends.

2. Distributable Web Applications

In a standard web application, session state does not survive beyond the lifespan of the servlet container.

A distributable web application allows session state to survive beyond the lifespan of a single server, either via persistence or by replicating state to other nodes in the cluster.

A web application indicates its intention to be distributable via the <distributable/> element within the web application’s deployment descriptor.

e.g.

<web-app xmlns="http://xmlns.jcp.org/xml/ns/javaee"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://xmlns.jcp.org/xml/ns/javaee http://xmlns.jcp.org/xml/ns/javaee/web-app_4_0.xsd"
         version="4.0">
    <distributable/>
</web-app>

2.1. Distributable Web Subsystem

The distributable-web subsystem manages a set of session management profiles that encapsulate the configuration of a distributable session manager. One of these profiles will be designated as the default profile (via the "default-session-management" attribute) and thus defines the default behavior of a distributable web application.

[standalone@embedded /] /subsystem=distributable-web:read-attribute(name=default-session-management)
{
    "outcome" => "success",
    "result" => "default"
}

The default session management stores web session data within an Infinispan cache. We can introspect its configuration:

[standalone@embedded /] /subsystem=distributable-web/infinispan-session-management=default:read-resource
{
    "outcome" => "success",
    "result" => {
        "cache" => undefined,
        "cache-container" => "web",
        "granularity" => "SESSION",
        "affinity" => {"primary-owner" => undefined}
    }
}

2.1.1. Infinispan session management

The infinispan-session-management resource configures a distributable session manager that uses an embedded Infinispan cache.

- cache-container: This references a cache-container defined in the Infinispan subsystem into which session data will be stored.

- cache: This references a cache within the associated cache-container upon whose configuration the web application’s cache will be based. If undefined, the default cache of the associated cache container will be used.

- granularity: This defines how the session manager will map a session into individual cache entries. Possible values are:

  - SESSION: Stores all session attributes within a single cache entry. This is generally more expensive than ATTRIBUTE granularity, but preserves any cross-attribute object references.

  - ATTRIBUTE: Stores each session attribute within a separate cache entry. This is generally more efficient than SESSION granularity, but does not preserve any cross-attribute object references.

- affinity: This resource defines the affinity that a web request should have for a given server. The affinity of the associated web session determines the algorithm for generating the route to be appended onto the session ID (within the JSESSIONID cookie, or when encoding URLs). This annotation of the session ID is used by load balancers to advise how future requests for existing sessions should be directed. Routing is designed to be opaque to application code such that calls to HttpSession.getId() always return an unmodified session ID. The route is only generated when creating/updating the JSESSIONID cookie, or when encoding URLs via HttpServletResponse.encodeURL() and encodeRedirectURL(). Possible values are:

  - affinity=none: Web requests will have no affinity to any particular node. This option is intended for use cases where web session state is not maintained within the application server.

  - affinity=local: Web requests will have an affinity to the server that last handled a request for a given session. This option corresponds to traditional sticky session behavior.

  - affinity=primary-owner: Web requests will have an affinity to the primary owner of a given session. This is the default affinity for this distributed session manager. Behaves the same as affinity=local if the backing cache is neither distributed nor replicated.

  - affinity=ranked: Web requests will have an affinity to the first available node in a ranked list comprising: primary owner, backup nodes, local node (if neither a primary nor a backup owner). Only for use with load balancers that support multiple routes. Behaves the same as affinity=local if the backing cache is neither distributed nor replicated.

- marshaller: Specifies the marshalling implementation used to serialize session attributes.

  - JBOSS: Marshals session attributes using JBoss Marshalling.

  - PROTOSTREAM: Marshals session attributes using ProtoStream.
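The route annotation described for the affinity resource above can be pictured with a small, self-contained sketch. It is not WildFly's implementation: the class name SessionRouteSketch, its methods, and the "." separator between session ID and route are assumptions made purely for illustration.

```java
import java.util.Optional;

/** Illustrative only: models a route being appended to a session ID for a load balancer. */
public class SessionRouteSketch {
    // Assumed separator between the session ID and the route (hypothetical).
    private static final char SEPARATOR = '.';

    /** Value written to the JSESSIONID cookie: the session ID plus an optional route. */
    public static String encode(String sessionId, Optional<String> route) {
        return route.map(r -> sessionId + SEPARATOR + r).orElse(sessionId);
    }

    /** What application code would see: the session ID without any route. */
    public static String sessionId(String cookieValue) {
        int index = cookieValue.indexOf(SEPARATOR);
        return (index < 0) ? cookieValue : cookieValue.substring(0, index);
    }

    /** What a load balancer would read to pick a target node, if a route is present. */
    public static Optional<String> route(String cookieValue) {
        int index = cookieValue.indexOf(SEPARATOR);
        return (index < 0) ? Optional.empty() : Optional.of(cookieValue.substring(index + 1));
    }

    public static void main(String[] args) {
        // A route is present when some affinity applies (e.g. affinity=local).
        String withRoute = encode("abc123", Optional.of("node1"));
        // No route is appended when there is no affinity (e.g. affinity=none).
        String withoutRoute = encode("abc123", Optional.empty());
        System.out.println(withRoute + " -> id=" + sessionId(withRoute) + ", route=" + route(withRoute));
        System.out.println(withoutRoute + " -> id=" + sessionId(withoutRoute) + ", route=" + route(withoutRoute));
    }
}
```

The point of the sketch is the separation of concerns: the application only ever handles the bare session ID, while the appended route is a hint consumed by the load balancer.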

e.g. Creating a new session management profile, using ATTRIBUTE granularity with local session affinity:

[standalone@embedded /] /subsystem=distributable-web/infinispan-session-management=foo:add(cache-container=web, granularity=ATTRIBUTE)
{
    "outcome" => "success"
}
[standalone@embedded /] /subsystem=distributable-web/infinispan-session-management=foo/affinity=local:add(){allow-resource-service-restart=true}
{
    "outcome" => "success"
}

2.1.2. HotRod session management

The hotrod-session-management resource configures a distributable session manager where session data is stored in a remote infinispan-server cluster via the HotRod protocol.

- remote-cache-container: This references a remote-cache-container defined in the Infinispan subsystem into which session data will be stored.

- cache-configuration: If a remote cache whose name matches the deployment name does not exist, this attribute defines a cache configuration within the remote infinispan server, from which an application-specific cache will be created.

- granularity: This defines how the session manager will map a session into individual cache entries. Possible values are:

  - SESSION: Stores all session attributes within a single cache entry. This is generally more expensive than ATTRIBUTE granularity, but preserves any cross-attribute object references.

  - ATTRIBUTE: Stores each session attribute within a separate cache entry. This is generally more efficient than SESSION granularity, but does not preserve any cross-attribute object references.

- affinity: This resource defines the affinity that a web request should have for a given server. The affinity of the associated web session determines the algorithm for generating the route to be appended onto the session ID (within the JSESSIONID cookie, or when encoding URLs). This annotation of the session ID is used by load balancers to advise how future requests for existing sessions should be directed. Routing is designed to be opaque to application code such that calls to HttpSession.getId() always return an unmodified session ID. The route is only generated when creating/updating the JSESSIONID cookie, or when encoding URLs via HttpServletResponse.encodeURL() and encodeRedirectURL(). Possible values are:

  - affinity=none: Web requests will have no affinity to any particular node. This option is intended for use cases where web session state is not maintained within the application server.

  - affinity=local: Web requests will have an affinity to the server that last handled a request for a given session. This option corresponds to traditional sticky session behavior.

- marshaller: Specifies the marshalling implementation used to serialize session attributes.

  - JBOSS: Marshals session attributes using JBoss Marshalling.

  - PROTOSTREAM: Marshals session attributes using ProtoStream.

e.g. Creating a new session management profile "foo" using the cache configuration "bar" defined on a remote infinispan server "datagrid" with ATTRIBUTE granularity:

[standalone@embedded /] /subsystem=distributable-web/hotrod-session-management=foo:add(remote-cache-container=datagrid, cache-configuration=bar, granularity=ATTRIBUTE)
{
    "outcome" => "success"
}

2.2. Overriding default behavior

A web application can override the default distributable session management behavior in one of two ways:

- Reference a session-management profile by name

- Provide deployment-specific session management configuration

2.2.1. Referencing an existing session management profile

To use an existing distributed session management profile, a web application should include a distributable-web.xml deployment descriptor located within the application’s /WEB-INF directory.

e.g.

<?xml version="1.0" encoding="UTF-8"?>
<distributable-web xmlns="urn:jboss:distributable-web:2.0">
    <session-management name="foo"/>
</distributable-web>

Alternatively, the target distributed session management profile can be defined within an existing jboss-all.xml deployment descriptor:

e.g.

<?xml version="1.0" encoding="UTF-8"?>
<jboss xmlns="urn:jboss:1.0">
    <distributable-web xmlns="urn:jboss:distributable-web:2.0">
        <session-management name="foo"/>
    </distributable-web>
</jboss>

2.2.2. Using a deployment-specific session management profile

If custom session management configuration will only be used by a single web application, you may find it more convenient to define the configuration within the deployment descriptor itself. Ad hoc configuration looks identical to the configuration used by the distributable-web subsystem.

e.g.

<?xml version="1.0" encoding="UTF-8"?>
<distributable-web xmlns="urn:jboss:distributable-web:2.0">
    <infinispan-session-management cache-container="foo" cache="bar" granularity="SESSION">
        <primary-owner-affinity/>
    </infinispan-session-management>
</distributable-web>

Alternatively, session management configuration can be defined within an existing jboss-all.xml deployment descriptor:

e.g.

<?xml version="1.0" encoding="UTF-8"?>
<jboss xmlns="urn:jboss:1.0">
    <distributable-web xmlns="urn:jboss:distributable-web:2.0">
        <infinispan-session-management cache-container="foo" cache="bar" granularity="ATTRIBUTE">
            <local-affinity/>
        </infinispan-session-management>
    </distributable-web>
</jboss>

2.3. Distributable Shared Sessions

WildFly supports the ability to share sessions across web applications within an enterprise archive.

In previous releases, WildFly always presumed distributable session management of shared sessions.

Version 2.0 of the shared-session-config deployment descriptor was updated to allow an EAR to opt in to this behavior using the familiar <distributable/> element.

Additionally, you can customize the behavior of the distributable session manager used for session sharing via the same configuration mechanism described in the above sections.

e.g.

<?xml version="1.0" encoding="UTF-8"?>
<jboss xmlns="urn:jboss:1.0">
    <shared-session-config xmlns="urn:jboss:shared-session-config:2.0">
        <distributable/>
        <session-config>
            <cookie-config>
                <path>/</path>
            </cookie-config>
        </session-config>
    </shared-session-config>
    <distributable-web xmlns="urn:jboss:distributable-web:2.0">
        <session-management name="foo"/>
    </distributable-web>
</jboss>

2.4. Optimizing performance of distributed web applications

One of the primary design goals of WildFly’s distributed session manager was the parity of HttpSession semantics between distributable and non-distributable web applications. In order to provide predictable behavior suitable for most web applications, the default distributed session manager configuration is quite conservative, generally favoring consistency over availability. However, these defaults may not be appropriate for your application. In general, the effective performance of the distributed session manager is constrained by:

- Replication/persistence payload size

- Locking/isolation of a given session

To optimize the configuration of the distributed session manager for your application, you can address the above constraints by tuning one or more of the following:

2.4.1. Session granularity

By default, WildFly’s distributed session manager uses SESSION granularity, meaning that all session attributes are stored within a single cache entry. While this ensures that any object references shared between session attributes are preserved following replication/persistence, it means that a change to a single attribute results in the replication/persistence of all attributes.

If your application does not share any object references between attributes, you are strongly advised to use ATTRIBUTE granularity. Using ATTRIBUTE granularity, each session attribute is stored in a separate cache entry. This means that a given request is only required to replicate/persist those attributes that were added, modified, removed, or mutated during that request. For read-heavy applications, this can dramatically reduce the replication/persistence payload per request.
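The trade-off between the two granularities can be illustrated with plain Java serialization. This is only a sketch under stated assumptions: WildFly marshals session attributes with JBoss Marshalling or ProtoStream rather than the code below, and the class GranularitySketch and its methods are hypothetical names. Serializing all attributes as one unit stands in for SESSION granularity, and serializing each attribute on its own stands in for ATTRIBUTE granularity.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Map;

/** Illustrative only: shows why shared references survive only when attributes travel together. */
public class GranularitySketch {

    /** Serializes and deserializes a value, standing in for replication/persistence. */
    @SuppressWarnings("unchecked")
    public static <T> T roundTrip(T value) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(value);
            }
            try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return (T) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    /** SESSION-like: all attributes are written as a single unit. */
    public static Map<String, Object> copyAsSingleEntry(Map<String, Object> attributes) {
        Map<String, Object> all = new HashMap<>(attributes);
        return roundTrip(all);
    }

    /** ATTRIBUTE-like: each attribute is written independently of the others. */
    public static Map<String, Object> copyPerAttribute(Map<String, Object> attributes) {
        Map<String, Object> copy = new HashMap<>();
        for (Map.Entry<String, Object> entry : attributes.entrySet()) {
            copy.put(entry.getKey(), roundTrip(entry.getValue()));
        }
        return copy;
    }

    /** Two attributes, "a" and "b", that reference the same list instance. */
    public static Map<String, Object> sampleAttributes() {
        ArrayList<String> shared = new ArrayList<>();
        Map<String, Object> attributes = new HashMap<>();
        attributes.put("a", shared);
        attributes.put("b", shared);
        return attributes;
    }

    public static void main(String[] args) {
        Map<String, Object> attributes = sampleAttributes();
        Map<String, Object> single = copyAsSingleEntry(attributes);
        Map<String, Object> perAttribute = copyPerAttribute(attributes);
        System.out.println("single entry keeps shared reference: " + (single.get("a") == single.get("b")));
        System.out.println("per attribute keeps shared reference: " + (perAttribute.get("a") == perAttribute.get("b")));
    }
}
```

If an application relies on two attributes pointing at the same object, only the single-unit copy keeps that identity; when attributes are independent, writing them separately avoids resending unchanged data.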

2.4.2. Session concurrency

WildFly’s default distributed session manager behavior is also conservative with respect to concurrent access to a given session. By default, a request acquires exclusive access to its associated session for the duration of a request, and until any async child context is complete. This maximizes the performance of a single request, as each request corresponds to a single cache transaction; allows for repeatable read semantics to the session; and ensures that subsequent requests are not prone to stale reads, even when handled by another cluster member.

However, if multiple requests attempt to access the same session concurrently, their processing will be effectively serialized. This might not be feasible, especially for heavily asynchronous web applications.

Relaxing transaction isolation from REPEATABLE_READ to READ_COMMITTED on the associated cache configuration will allow concurrent requests to perform lock-free (but potentially stale) reads by deferring locking to the first attempt to write to the session. This improves the throughput of requests for the same session for highly asynchronous web applications whose session access is read-heavy.

e.g.

/subsystem=infinispan/cache-container=web/distributed-cache=dist/component=locking:write-attribute(name=isolation, value=READ_COMMITTED)

For asynchronous web applications whose session access is write-heavy, merely relaxing transaction isolation is not likely to be sufficient. These web applications will likely benefit from disabling cache transactions altogether. When transactions are disabled, cache entries are locked and released for every write to the session, resulting in last-write-wins semantics. For write-heavy applications, this typically improves the throughput of concurrent requests for the same session, at the cost of longer response times for individual requests.

/subsystem=infinispan/cache-container=web/distributed-cache=dist/component=transaction:write-attribute(name=mode, value=NONE)

| Relaxing transaction isolation currently prevents WildFly from enforcing that a given session is handled by one JVM at a time, a constraint dictated by the servlet specification. |

2.4.3. Session attribute immutability

In WildFly, distributed session attributes are presumed to be mutable objects, unless of a known immutable type, or unless otherwise specified.

Take the following session access pattern:

HttpSession session = request.getSession();
// getAttribute() returns Object, so a cast to the attribute type is required
MutableObject object = (MutableObject) session.getAttribute("...");
object.mutate();

By default, WildFly replicates/persists the mutable session attributes at the end of the request, ensuring that a subsequent request will read the mutated value, not the original value.

However, the replication/persistence of mutable session attributes at the end of the request happens whether or not these objects were actually mutated.

To avoid redundant session writes, users are strongly encouraged to store immutable objects in the session whenever possible.

This allows the application more control over when session attributes will replicate/persist, since immutable session attributes will only update upon explicit calls to HttpSession.setAttribute(…).
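A minimal sketch of this access pattern, written against stand-ins rather than the servlet API: FakeSession is a hypothetical substitute for HttpSession that merely counts explicit writes, and Cart is a hypothetical immutable attribute type. Neither is part of WildFly.

```java
import java.io.Serializable;
import java.util.HashMap;
import java.util.Map;

/** Illustrative only: an immutable attribute changes solely through explicit setAttribute calls. */
public class ImmutableAttributeSketch {

    /** An immutable value: its field is final, and a "change" produces a new instance. */
    public static final class Cart implements Serializable {
        private static final long serialVersionUID = 1L;
        private final int itemCount;

        public Cart(int itemCount) {
            this.itemCount = itemCount;
        }

        public int getItemCount() {
            return itemCount;
        }

        public Cart withAddedItem() {
            return new Cart(itemCount + 1);
        }
    }

    /** Hypothetical stand-in for the HttpSession attribute API; counts explicit writes. */
    public static final class FakeSession {
        private final Map<String, Object> attributes = new HashMap<>();
        private int writes;

        public Object getAttribute(String name) {
            return attributes.get(name);
        }

        public void setAttribute(String name, Object value) {
            attributes.put(name, value);
            writes++;
        }

        public int getWrites() {
            return writes;
        }
    }

    public static void main(String[] args) {
        FakeSession session = new FakeSession();
        session.setAttribute("cart", new Cart(0));

        // A read-only request: the attribute cannot change, so nothing needs to be written.
        Cart cart = (Cart) session.getAttribute("cart");

        // An updating request: the new state is stored through an explicit setAttribute call.
        session.setAttribute("cart", cart.withAddedItem());

        Cart current = (Cart) session.getAttribute("cart");
        System.out.println("items=" + current.getItemCount() + ", explicit writes=" + session.getWrites());
    }
}
```

Because every state change goes through setAttribute, the application decides exactly when an attribute becomes eligible for replication/persistence.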

WildFly can determine whether most JDK types are immutable, but any unrecognized/custom types are presumed to be mutable. To indicate that a given session attribute of a custom type should be treated as immutable by the distributed session manager, annotate the class with one of the following annotations:

- @org.wildfly.clustering.web.annotation.Immutable

- @net.jcip.annotations.Immutable

e.g.

@Immutable
public class ImmutableClass implements Serializable {
    // ...
}

Alternatively, immutable classes can be enumerated via the distributable-web deployment descriptor.

e.g.

<distributable-web xmlns="urn:jboss:distributable-web:2.0">
    <session-management>
        <immutable-class>foo.bar.ImmutableClass</immutable-class>
        <immutable-class>...</immutable-class>
    </session-management>
</distributable-web>

2.4.4. Session attribute marshalling

Minimizing the replication/persistence payload for individual session attributes has a direct impact on performance by reducing the number of bytes sent over the network or persisted to storage. See the Marshalling section for more details.

3. Distributable Jakarta Enterprise Beans Applications

Just as with standard web applications, session state of stateful session beans (SFSB) contained in a standard EJB application is not guaranteed to survive beyond the lifespan of the Jakarta Enterprise Beans container. And, as with standard web applications, there is a way to allow session state of SFSBs to survive beyond the lifespan of a single server, either through persistence or by replicating state to other nodes in the cluster.

A distributable SFSB is one whose state is made available on multiple nodes in a cluster and which supports failover of invocation attempts: if the node on which the SFSB was created fails, the invocation will be retried on another node in the cluster where the SFSB state is present.

In the case of Jakarta Enterprise Beans applications, whether or not a bean is distributable is determined globally or on a per-bean basis, rather than on an application-wide basis as in the case of distributed HttpSessions.

A stateful session bean within a Jakarta Enterprise Beans application indicates its intention to be distributable by using a passivation-capable cache to store its session state. Cache factories were discussed in the Jakarta Enterprise Beans section of the WildFly Admin Guide. Additionally, the EJB application needs to be deployed into a server which uses a High Availability (HA) server profile, such as standalone-ha.xml or standalone-full-ha.xml.

Since Jakarta Enterprise Beans are passivation-capable by default, generally, users already using an HA profile will not need to make any configuration changes for their beans to be distributable and, consequently, to support failover. More fine-grained control over whether a bean is distributable can be achieved using the passivationCapable attribute of the @Stateful annotation (or the equivalent deployment descriptor override). A bean which is marked as @Stateful(passivationCapable=false) will not exhibit distributable behavior (i.e. failover), even when the application containing it is deployed in a cluster.

| More information on passivation-capable beans can be found in Section 4.6.5 of the Jakarta Enterprise Beans specification. |

In the sections that follow, we discuss some aspects of configuring distributable Jakarta Enterprise Beans applications in WildFly.

3.1. Distributable EJB Subsystem

The purpose of the distributable-ejb subsystem is to permit configuration of clustering abstractions required to support those resources of the ejb3 subsystem which support clustered operation. The key resources of the ejb3 subsystem which require clustering abstractions are:

- cache factories: Passivating cache factories depend on a bean management provider to provide passivation and persistence of SFSB session states in a local or distributed environment.

- client mappings registries: Supporting remote invocation on SFSBs deployed in a cluster requires storing client mappings information in a client mappings registry. The registry may be tailored for a local or a distributed environment.

These clustering abstractions are made available to the ejb3 subsystem via the specification and configuration of clustering abstraction 'providers'. We describe the available providers below.

3.1.1. Bean management providers

A bean management provider provides access to a given implementation of a bean manager, used by passivation-capable cache factories defined in the ejb3 subsystem to manage passivation and persistence.

Bean management provider elements are named, and represent different implementation and configuration choices for bean management.

At least one named bean management provider must be defined in the distributable-ejb subsystem and, of those, one instance must be identified as the default bean management provider, using the default-bean-management attribute of the distributable-ejb subsystem.

The available bean management provider is:

infinispan-bean-management

The infinispan-bean-management provider element represents a bean manager implementation based on an Infinispan cache. The attributes for the infinispan-bean-management element are:

- cache-container: Specifies a cache container defined in the Infinispan subsystem used to support the session state cache

- cache: Specifies the session state cache and its configured properties

- max-active-beans: Specifies the maximum number of non-passivated session state entries allowed in the cache

3.1.2. Client mappings registries

A client mappings registry provider provides access to a given implementation of a client mappings registry, used by the EJB client invocation mechanism to store information about client mappings for each node in the cluster. Client mappings are defined in the socket bindings configuration of a server and are required to allow an EJB client application to connect to servers which are multi-homed (i.e. clients may access the same server from different networks using a different IP address and port for each interface on the multi-homed server).

The available client mappings registry providers are:

infinispan-client-mappings-registry

The infinispan-client-mappings-registry provider is a provider based on an Infinispan cache and suitable for a clustered server.

- cache-container: Specifies a cache container defined in the Infinispan subsystem used to support the client mappings registry

- cache: Specifies the cache and its configured properties used to support the client mappings registry

3.1.3. Timer management

The distributable-ejb subsystem defines a set of timer management resources that define behavior for persistent or non-persistent EJB timers.

To use distributable timer management for EJB timers, one must first disable the existing in-memory mechanisms in the ejb3 subsystem. See Jakarta Enterprise Beans Distributed Timer documentation for details.

infinispan-timer-management

This provider stores timer metadata within an embedded Infinispan cache, and utilizes consistent hashing to distribute timer execution between cluster members.

- cache-container: Specifies a cache container defined in the Infinispan subsystem

- cache: Specifies a cache configuration within the specified cache-container

- max-active-timers: Specifies the maximum number of active timers to retain in memory at a time, after which the least recently used will passivate

- marshaller: Specifies the marshalling implementation used to serialize the timeout context of a timer.

  - JBOSS: Marshals the timeout context using JBoss Marshalling.

  - PROTOSTREAM: Marshals the timeout context using ProtoStream.

To ensure proper functioning, the associated cache configuration, regardless of type, should use:

- BATCH transaction mode

- REPEATABLE_READ lock isolation

Generally, persistent timers will leverage a distributed or replicated cache configuration if in a cluster, or a local, persistent cache configuration if on a single server; while transient timers will leverage a local, passivating cache configuration.

By default, all cluster members will be eligible for timer execution.

A given cluster member can exclude itself from timer execution by using a cache capacity-factor of 0.

3.2. Deploying clustered EJBs

Clustering support is available in the HA profiles of WildFly. In this chapter we’ll use the standalone server to explain the details; the same applies to servers in domain mode. To start the standalone server with HA capabilities enabled, start it with standalone-ha.xml (or standalone-full-ha.xml):

./standalone.sh -server-config=standalone-ha.xml

This will start a single instance of the server with HA capabilities. Deploying the EJBs to this instance doesn’t involve anything special and is the same as explained in the application deployment chapter.

Obviously, to see the benefits of clustering, you’ll need more than one instance of the server. So let’s start another server with HA capabilities. The other instance can run either on the same machine or on a different machine. If it’s on the same machine, you have to make sure of two things: that you pass a port offset for the second instance, and that each server instance has a unique jboss.node.name system property. You can do that by passing the following two system properties to the startup command:

./standalone.sh -server-config=standalone-ha.xml -Djboss.socket.binding.port-offset=<offset of your choice> -Djboss.node.name=<unique node name>

Follow whichever approach you feel comfortable with for deploying the EJB deployment to this instance too.

| Deploying the application on just one node of a standalone instance of a clustered server does not mean that it will be automatically deployed to the other clustered instance. You will have to deploy it explicitly on the other standalone clustered instance too. Alternatively, you can start the servers in domain mode so that the deployment can be deployed to all the servers within a server group. See the admin guide for more details on domain setup. |

Now that you have deployed an application with clustered EJBs on both the instances, the EJBs are now capable of making use of the clustering features.

3.2.1. Failover for clustered EJBs

Clustered EJBs have failover capability. The state of the @Stateful @Clustered EJBs is replicated across the cluster nodes so that if one of the nodes in the cluster goes down, some other node will be able to take over the invocations. Let’s see how it’s implemented in WildFly. In the next few sections we’ll see how it works for remote (standalone) clients and for clients in another remote WildFly server instance. Although it works the same way in both cases, we’ll explain them separately to make sure there aren’t any unanswered questions.

3.2.2. Remote standalone clients

In this section we’ll consider a remote standalone client (i.e. a client which runs in a separate JVM and isn’t running within another WildFly 8 instance). Let’s assume that we have two servers, server X and server Y, which we started earlier. Each of these servers has the clustered EJB deployment. A standalone remote client can use either the JNDI approach or native JBoss EJB client APIs to communicate with the servers. The important thing to note is that when you are invoking clustered EJB deployments, you do not have to list all the servers within the cluster (which obviously wouldn’t be feasible due to the dynamic nature of cluster node additions within a cluster).

The remote client has to list only one of the servers with the clustering capability. In this case, we can list either server X or server Y in jboss-ejb-client.properties. This server will act as the starting point for cluster topology communication between the client and the clustered nodes.

Note that you have to configure the ejb cluster in the jboss-ejb-client.properties configuration file, like so:

remote.clusters=ejb

remote.cluster.ejb.connect.options.org.xnio.Options.SASL_POLICY_NOANONYMOUS=false

remote.cluster.ejb.connect.options.org.xnio.Options.SSL_ENABLED=false

3.2.3. Cluster topology communication

When a client connects to a server, the JBoss EJB client implementation (internally) communicates with the server for cluster topology information, provided the server has clustering capability. In our example above, let’s assume we listed server X as the initial server to connect to. When the client connects to server X, the server will send back an (asynchronous) cluster topology message to the client. This topology message consists of the cluster name(s) and the information of the nodes that belong to the cluster. The node information includes the node address and port number to connect to (whenever necessary). So in this example, server X will send back the cluster topology, which includes the other server, server Y, that belongs to the cluster.

In case of stateful (clustered) EJBs, a typical invocation flow involves creating a session for the stateful bean, which happens when you do a JNDI lookup for that bean, and then invoking on the returned proxy. The lookup for a stateful bean internally triggers a (synchronous) session creation request from the client to the server. In this case, the session creation request goes to server X, since that’s the initial connection that we have configured in our jboss-ejb-client.properties. Since server X is clustered, it will return a session id along with an "affinity" for that session. In case of clustered servers, the affinity equals the name of the cluster to which the stateful bean belongs on the server side. For non-clustered beans, the affinity is just the name of the node on which the session was created. This affinity will later help the EJB client to route the invocations on the proxy appropriately, either to a node within a cluster (for clustered beans) or to a specific node (for non-clustered beans). While this session creation request is going on, server X will also send back an asynchronous message which contains the cluster topology. The JBoss EJB client implementation will take note of this topology information and will later use it to create connections to nodes within the cluster and to route invocations to those nodes, whenever necessary.

Now that we know how the cluster topology information is communicated from the server to the client, let’s see how failover works. Let’s continue with the example of server X being our starting point and a client application looking up a stateful bean and invoking on it. During these invocations, the client side will have collected the cluster topology information from the server. Now let’s assume that, for some reason, server X goes down and the client application subsequently invokes on the proxy. The JBoss EJB client implementation will, at this stage, be aware of the affinity, and in this case it’s a cluster affinity. Because of the cluster topology information it has, it knows that the cluster has two nodes, server X and server Y. When the invocation now arrives, it sees that server X is down, so it uses a selector to fetch a suitable node from among the cluster nodes. The selector itself is configurable, but we’ll leave it out of the discussion for now. When the selector returns a node from among the cluster, the JBoss EJB client implementation creates a connection to that node (if not already created earlier) and creates an EJB receiver out of it. Since in our example the only other node in the cluster is server Y, the selector will return that node, and the JBoss EJB client implementation will create an EJB receiver out of it and use that receiver to pass on the invocation on the proxy. Effectively, the invocation has now failed over to a different node within the cluster.
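The failover decision described above can be sketched in a few lines of plain Java. This is only an illustrative model, not the JBoss EJB client API: the class FailoverSketch, the NodeSelector interface and the node names are hypothetical, and the real client tracks connections and receivers rather than simple strings.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Hypothetical model of client-side failover; not the JBoss EJB client API. */
final class FailoverSketch {

    /** Hypothetical selector: picks one node from the nodes that are still reachable. */
    interface NodeSelector {
        String select(String clusterName, List<String> reachableNodes);
    }

    /**
     * Routes an invocation that carries a cluster affinity.
     * topology holds the nodes reported by the server for the cluster;
     * down holds the nodes the client has found to be unreachable.
     */
    static String route(String clusterName, List<String> topology, Set<String> down, NodeSelector selector) {
        List<String> reachable = new ArrayList<>();
        for (String node : topology) {
            if (!down.contains(node)) {
                reachable.add(node);
            }
        }
        if (reachable.isEmpty()) {
            throw new IllegalStateException("No reachable node in cluster " + clusterName);
        }
        return selector.select(clusterName, reachable);
    }

    public static void main(String[] args) {
        NodeSelector first = (cluster, nodes) -> nodes.get(0);
        List<String> topology = List.of("serverX", "serverY");
        // While server X is up, the selector may return it.
        System.out.println(route("ejb", topology, Set.of(), first));
        // Once server X is down, only server Y remains as a candidate.
        System.out.println(route("ejb", topology, Set.of("serverX"), first));
    }
}
```

The point of the sketch is that the client needs only the topology and the cluster affinity to pick a replacement node; which node it picks is delegated to the selector.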

3.2.4. Remote clients on another instance of WildFly

So far we discussed remote standalone clients, which typically use either the EJB client API or the jboss-ejb-client.properties based approach to configure and communicate with the servers where the clustered beans are deployed. Now let’s consider the case where the client is an application deployed on another WildFly instance and it wants to invoke on a clustered stateful bean which is deployed on a different instance of WildFly. In this example let’s consider a case where we have 3 servers involved. Server X and server Y both belong to a cluster and have clustered EJBs deployed on them. Server C (which may or may not have clustering capability) acts as a client: it hosts a deployment which wants to invoke on the clustered beans deployed on server X and Y and achieve failover.

The configurations required to achieve this are explained in this chapter. As you can see, the configurations are done in a jboss-ejb-client.xml which points to a remote outbound connection to the other server. This jboss-ejb-client.xml goes in the deployment on server C (since that’s our client). As explained earlier, the client configuration need not point to all clustered nodes. Instead it just has to point to one of them, which will act as a starting point for communication. So in this case, we can create a remote outbound connection on server C to server X and use server X as our starting point for communication. Just like in the case of remote standalone clients, when the application on server C (client) looks up a stateful bean, a session creation request will be sent to server X, which will send back a session id and the cluster affinity for it. Furthermore, server X asynchronously sends back a message to server C (client) containing the cluster topology. This topology information will include the node information of server Y (since that belongs to the cluster along with server X). Subsequent invocations on the proxy will be routed appropriately to the nodes in the cluster. If server X goes down, as explained earlier, a different node from the cluster will be selected and the invocation will be forwarded to that node.

As can be seen, both remote standalone clients and remote clients on another WildFly instance behave similarly in terms of failover.

| References in this document to Enterprise JavaBeans (EJB) refer to the Jakarta Enterprise Beans unless otherwise noted. |

4. Messaging

This section is under development intending to describe high availability features and configuration pertaining to Jakarta Messaging (JMS).

5. Load Balancing

5.1. mod_cluster Subsystem

The mod_cluster integration is done via the mod_cluster subsystem.

5.1.1. Configuration

Instance ID or JVMRoute

The instance-id or JVMRoute defaults to the jboss.node.name property passed on server startup (e.g. via -Djboss.node.name=XYZ).

[standalone@localhost:9990 /] /subsystem=undertow:read-attribute(name=instance-id)

{

"outcome" => "success",

"result" => expression "${jboss.node.name}"

}

To configure instance-id statically, configure the corresponding property in the Undertow subsystem:

[standalone@localhost:9990 /] /subsystem=undertow:write-attribute(name=instance-id,value=myroute)

{

"outcome" => "success",

"response-headers" => {

"operation-requires-reload" => true,

"process-state" => "reload-required"

}

}

Proxies

By default, mod_cluster is configured for multicast-based discovery. To specify a static list of proxies, create a remote-destination-outbound-socket-binding for each proxy and then reference them in the 'proxies' attribute. See the following example for configuration in domain mode:

[domain@localhost:9990 /] /socket-binding-group=ha-sockets/remote-destination-outbound-socket-binding=proxy1:add(host=10.21.152.86, port=6666)

{

"outcome" => "success",

"result" => undefined,

"server-groups" => undefined

}

[domain@localhost:9990 /] /socket-binding-group=ha-sockets/remote-destination-outbound-socket-binding=proxy2:add(host=10.21.152.87, port=6666)

{

"outcome" => "success",

"result" => undefined,

"server-groups" => undefined

}

[domain@localhost:9990 /] /profile=ha/subsystem=modcluster/proxy=default:write-attribute(name=proxies, value=[proxy1, proxy2])

{

"outcome" => "success",

"result" => undefined,

"server-groups" => undefined

}

[domain@localhost:9990 /] :reload-servers

{

"outcome" => "success",

"result" => undefined,

"server-groups" => undefined

}

Multiple mod_cluster Configurations

Since WildFly 14, the mod_cluster subsystem supports multiple named proxy configurations, which also allows registering non-default Undertow servers with the reverse proxies. Moreover, this allows a single application server node to register with different groups of proxy servers.

The following example adds another Undertow AJP listener, server and host, and adds a new mod_cluster configuration which registers this host using the advertise mechanism.

/socket-binding-group=standard-sockets/socket-binding=ajp-other:add(port=8010)

/subsystem=undertow/server=other-server:add

/subsystem=undertow/server=other-server/ajp-listener=ajp-other:add(socket-binding=ajp-other)

/subsystem=undertow/server=other-server/host=other-host:add(default-web-module=root-other.war)

/subsystem=undertow/server=other-server/host=other-host/location=other:add(handler=welcome-content)

/subsystem=undertow/server=other-server/host=other-host:write-attribute(name=alias,value=[localhost])

/socket-binding-group=standard-sockets/socket-binding=modcluster-other:add(multicast-address=224.0.1.106,multicast-port=23364)

/subsystem=modcluster/proxy=other:add(advertise-socket=modcluster-other,balancer=other-balancer,connector=ajp-other)

reload

5.1.2. Runtime Operations

The modcluster subsystem supports several operations:

[standalone@localhost:9990 /] /subsystem=modcluster/proxy=default:read-operation-names

{

"outcome" => "success",

"result" => [

"add",

"add-custom-metric",

"add-metric",

"add-proxy",

"disable",

"disable-context",

"enable",

"enable-context",

"list-proxies",

"read-attribute",

"read-children-names",

"read-children-resources",

"read-children-types",

"read-operation-description",

"read-operation-names",

"read-proxies-configuration",

"read-proxies-info",

"read-resource",

"read-resource-description",

"refresh",

"remove-custom-metric",

"remove-metric",

"remove-proxy",

"reset",

"stop",

"stop-context",

"validate-address",

"write-attribute"

]

}

The operations specific to the modcluster subsystem are divided into 3 categories: the ones that affect the configuration and require a restart of the subsystem, the ones that just modify the behaviour temporarily, and the ones that display information from the httpd part.

Operations displaying httpd information

There are 2 operations that display how Apache httpd sees the node:

read-proxies-configuration

Sends a DUMP message to every Apache httpd instance the node is connected to and displays the message received from each.

[standalone@localhost:9990 /] /subsystem=modcluster/proxy=default:read-proxies-configuration

{

"outcome" => "success",

"result" => [

"neo3:6666",

"balancer: [1] Name: mycluster Sticky: 1 [JSESSIONID]/[jsessionid] remove: 0 force: 1 Timeout: 0 Maxtry: 1

node: [1:1],Balancer: mycluster,JVMRoute: 498bb1f0-00d9-3436-a341-7f012bc2e7ec,Domain: [],Host: 127.0.0.1,Port: 8080,Type: http,flushpackets: 0,flushwait: 10,ping: 10,smax: 26,ttl: 60,timeout: 0

host: 1 [example.com] vhost: 1 node: 1

host: 2 [localhost] vhost: 1 node: 1

host: 3 [default-host] vhost: 1 node: 1

context: 1 [/myapp] vhost: 1 node: 1 status: 1

context: 2 [/] vhost: 1 node: 1 status: 1

",

"jfcpc:6666",

"balancer: [1] Name: mycluster Sticky: 1 [JSESSIONID]/[jsessionid] remove: 0 force: 1 Timeout: 0 maxAttempts: 1

node: [1:1],Balancer: mycluster,JVMRoute: 498bb1f0-00d9-3436-a341-7f012bc2e7ec,LBGroup: [],Host: 127.0.0.1,Port: 8080,Type: http,flushpackets: 0,flushwait: 10,ping: 10,smax: 26,ttl: 60,timeout: 0

host: 1 [default-host] vhost: 1 node: 1

host: 2 [localhost] vhost: 1 node: 1

host: 3 [example.com] vhost: 1 node: 1

context: 1 [/] vhost: 1 node: 1 status: 1

context: 2 [/myapp] vhost: 1 node: 1 status: 1

"

]

}

read-proxies-info

Sends an INFO message to every Apache httpd instance the node is connected to and displays the message received from each.

[standalone@localhost:9990 /] /subsystem=modcluster/proxy=default:read-proxies-info

{

"outcome" => "success",

"result" => [

"neo3:6666",

"Node: [1],Name: 498bb1f0-00d9-3436-a341-7f012bc2e7ec,Balancer: mycluster,Domain: ,Host: 127.0.0.1,Port: 8080,Type: http,Flushpackets: Off,Flushwait: 10000,Ping: 10000000,Smax: 26,Ttl: 60000000,Elected: 0,Read: 0,Transfered: 0,Connected: 0,Load: -1

Vhost: [1:1:1], Alias: example.com

Vhost: [1:1:2], Alias: localhost

Vhost: [1:1:3], Alias: default-host

Context: [1:1:1], Context: /myapp, Status: ENABLED

Context: [1:1:2], Context: /, Status: ENABLED

",

"jfcpc:6666",

"Node: [1],Name: 498bb1f0-00d9-3436-a341-7f012bc2e7ec,Balancer: mycluster,LBGroup: ,Host: 127.0.0.1,Port: 8080,Type: http,Flushpackets: Off,Flushwait: 10,Ping: 10,Smax: 26,Ttl: 60,Elected: 0,Read: 0,Transfered: 0,Connected: 0,Load: 1

Vhost: [1:1:1], Alias: default-host

Vhost: [1:1:2], Alias: localhost

Vhost: [1:1:3], Alias: example.com

Context: [1:1:1], Context: /, Status: ENABLED

Context: [1:1:2], Context: /myapp, Status: ENABLED

"

]

}

Operations that handle the proxies the node is connected to

There are 3 operations that can be used to manipulate the list of Apache httpd instances the node is connected to.

list-proxies

Displays the httpd instances that are connected to the node. They may have been discovered via the Advertise protocol or configured via the proxy-list attribute.

[standalone@localhost:9990 subsystem=modcluster] :list-proxies

{

"outcome" => "success",

"result" => [

"proxy1:6666",

"proxy2:6666"

]

}

Context related operations

These operations send context related commands to Apache httpd. They are sent automatically when deploying or undeploying webapps.

enable-context

Tells Apache httpd that the context is ready to receive requests.

[standalone@localhost:9990 /] /subsystem=modcluster/proxy=default:enable-context(context=/myapp, virtualhost=default-host)

{"outcome" => "success"}

Node related operations

These operations are like the context operations, but they apply to all webapps running on the node, and they include operations that affect the whole node.

Configuration

Metric configuration

There are 4 metric operations, which add load metrics to and remove load metrics from the dynamic-load-provider. Note that when nothing is defined, a simple-load-provider is used with a fixed load factor of one.

[standalone@localhost:9990 /] /subsystem=modcluster/proxy=default:read-resource(name=mod-cluster-config)

{

"outcome" => "success",

"result" => {"simple-load-provider" => {"factor" => "1"}}

}

This corresponds to the following configuration:

<subsystem xmlns="urn:jboss:domain:modcluster:1.0">

<mod-cluster-config>

<simple-load-provider factor="1"/>

</mod-cluster-config>

</subsystem>

Add a metric to the dynamic-load-provider. The dynamic-load-provider is created in the configuration if needed.

[standalone@localhost:9990 /] /subsystem=modcluster/proxy=default:add-metric(type=cpu)

{"outcome" => "success"}

[standalone@localhost:9990 /] /subsystem=modcluster/proxy=default:read-resource(name=mod-cluster-config)

{

"outcome" => "success",

"result" => {

"dynamic-load-provider" => {

"history" => 9,

"decay" => 2,

"load-metric" => [{

"type" => "cpu"

}]

}

}

}

Remove a metric from the dynamic-load-provider.

[standalone@localhost:9990 /] /subsystem=modcluster/proxy=default:remove-metric(type=cpu)

{"outcome" => "success"}

The add-custom-metric and remove-custom-metric operations are like add-metric and remove-metric, except that they require a class parameter instead of the type. Custom metrics usually need additional properties, which can be specified as follows:

[standalone@localhost:9990 /] /subsystem=modcluster/proxy=default:add-custom-metric(class=myclass, property=[("pro1" => "value1"), ("pro2" => "value2")])

{"outcome" => "success"}

This corresponds to the following in the XML configuration file:

<subsystem xmlns="urn:jboss:domain:modcluster:1.0">

<mod-cluster-config>

<dynamic-load-provider history="9" decay="2">

<custom-load-metric class="myclass">

<property name="pro1" value="value1"/>

<property name="pro2" value="value2"/>

</custom-load-metric>

</dynamic-load-provider>

</mod-cluster-config>

</subsystem>

5.1.3. SSL Configuration using Elytron Subsystem

This section describes how to configure the mod_cluster subsystem to protect communication between mod_cluster and the load balancer with SSL/TLS, using the Elytron subsystem.

Overview

The Elytron subsystem provides a powerful and flexible model to configure different security aspects for applications and the application server itself. At its core, the Elytron subsystem exposes different capabilities to the application server in order to centralize security related configuration in a single place and to allow other subsystems to consume these capabilities. One of the security capabilities exposed by the Elytron subsystem is a Client ssl-context that can be used to configure the mod_cluster subsystem to communicate with a load balancer using SSL/TLS.

When protecting the communication between the application server and the load balancer, you need to define a Client ssl-context in order to:

-

Define a trust store holding the certificate chain that will be used to validate load balancer’s certificate

-

Define a trust manager to perform validations against the load balancer’s certificate

Defining a Trust Store with the Trusted Certificates

To define a trust store in Elytron you can execute the following CLI command:

/subsystem=elytron/key-store=default-trust-store:add(type=PKCS12, relative-to=jboss.server.config.dir, path=application.truststore, credential-reference={clear-text=password})

In order to successfully execute the command above you must have an application.truststore file inside your JBOSS_HOME/standalone/configuration directory, protected by a password whose value is password. The trust store must contain the certificates associated with the load balancer, or a certificate chain in case the load balancer’s certificate is signed by a CA.

We strongly recommend that you avoid using self-signed certificates with your load balancer. Ideally, certificates should be signed by a CA and your trust store should contain a certificate chain representing your root and intermediate CAs.

Defining a Trust Manager To Validate Certificates

To define a trust manager in Elytron you can execute the following CLI command:

/subsystem=elytron/trust-manager=default-trust-manager:add(algorithm=PKIX, key-store=default-trust-store)

Here we are setting the default-trust-store as the source of the certificates that the application server trusts.

Defining a Client SSL Context and Configuring mod_cluster Subsystem

Finally, you can create the Client SSL Context that is going to be used by the mod_cluster subsystem when connecting to the load balancer using SSL/TLS:

/subsystem=elytron/client-ssl-context=modcluster-client:add(trust-manager=default-trust-manager)

Now that the Client ssl-context is defined, you can configure the mod_cluster subsystem as follows:

/subsystem=modcluster/proxy=default:write-attribute(name=ssl-context, value=modcluster-client)

Once you execute the last command above, reload the server:

reload

Using a Certificate Revocation List

In case you want to validate the load balancer certificate against a Certificate Revocation List (CRL), you can configure the trust-manager in the Elytron subsystem as follows:

/subsystem=elytron/trust-manager=default-trust-manager:write-attribute(name=certificate-revocation-list.path, value=intermediate.crl.pem)

To use a CRL, your trust store must contain the certificate chain in order to check the validity of both the CRL and the load balancer’s certificate.

A different way to configure a CRL is using the Distribution Points embedded in your certificates. For that, you need to configure a certificate-revocation-list as follows:

/subsystem=elytron/trust-manager=default-trust-manager:write-attribute(name=certificate-revocation-list)

5.1.4. Remote User Authentication with Elytron

It is possible to accept a REMOTE_USER already authenticated by the Apache httpd server with Elytron via the AJP protocol.

This can be done by setting up Elytron to secure a WildFly deployment and specifying that the External HTTP mechanism be used. To do so, create a security domain and specify the External mechanism as one of the mechanism configurations to be used by the http-authentication-factory:

/subsystem=elytron/http-authentication-factory=web-tests:add(security-domain=example-domain, http-server-mechanism-factory=example-factory, mechanism-configurations=[{mechanism-name=EXTERNAL}])

Elytron will accept the externally authenticated user and use the specified security domain to perform role mapping to complete authorization.

5.2. Enabling ranked affinity support in load balancer

Enabling ranked affinity support in the server must be accompanied by a compatible load balancer with ranked affinity support enabled. When using WildFly as a load balancer ranked routing can be enabled with the following CLI command:

/subsystem=undertow/configuration=filter/mod-cluster=load-balancer/affinity=ranked:add

The default delimiter that separates the node routes is ".", which encodes multiple routes as node1.node2.node3.
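To make the encoding concrete, the following sketch splits an encoded route list on the delimiter and picks the first route that is currently available. It is a simplified illustration under stated assumptions, not the load balancer's implementation: the class RankedRoutesSketch and its methods are hypothetical, and it assumes that routes are listed in descending order of preference.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.Set;
import java.util.regex.Pattern;

/** Hypothetical illustration of ranked routes; not the load balancer's code. */
final class RankedRoutesSketch {

    /** Splits an encoded route list such as "node1.node2.node3" into its ranked routes. */
    static List<String> parse(String encodedRoutes, String delimiter) {
        // Pattern.quote is needed because "." is a regex metacharacter.
        return Arrays.asList(encodedRoutes.split(Pattern.quote(delimiter)));
    }

    /** Returns the highest-ranked route that is currently available, if any (assumed ordering). */
    static Optional<String> pick(List<String> rankedRoutes, Set<String> availableNodes) {
        return rankedRoutes.stream().filter(availableNodes::contains).findFirst();
    }
}
```

For example, with routes node1.node2.node3 and node1 unavailable, pick would return node2 under these assumptions.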

Should the delimiter be required to be different, this is configurable by the delimiter attribute of the affinity resource.

See the following CLI command:

/subsystem=undertow/configuration=filter/mod-cluster=load-balancer/affinity=ranked:write-attribute(name=delimiter,value=":")

5.3. Support for load-balancers relying on affinity cookie

While the Apache family of load-balancers relies on attaching session affinity (routing) information by default to a JSESSIONID cookie or a jsessionid path parameter, there are other load-balancers that rely on a cookie to drive session affinity.

In order to configure the cookie name and other properties use the following CLI script:

/subsystem=undertow/servlet-container=default/setting=affinity-cookie:add(name=SRV)

The affinity can be specified either in the instance-id or by providing the jboss.node.name property to the server.

./bin/standalone.sh -c standalone-ha.xml -Djboss.node.name=ribera1

For complete documentation on configuring the servlet container refer to the Undertow documentation section.

5.3.1. HAProxy

The following is a minimal HAProxy configuration that makes the load-balancer respect the affinity provided by the application server.

defaults

timeout connect 5s

timeout client 50s

timeout server 50s

frontend myfrontend

bind 127.0.0.1:8888

default_backend myservers

backend myservers

mode http

cookie SRV indirect preserve

option redispatch

server server1 127.0.0.1:8080 cookie ribera1

server server2 127.0.0.1:8180 cookie ribera2

Notice that the cookie values defined for the servers in the proxy configuration (ribera1 and ribera2 above) need to correspond to the application servers’ instance-id values.
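The reason for this requirement can be seen in a small model of cookie-based routing. The sketch below is hypothetical and greatly simplified (the class AffinityCookieSketch is not part of HAProxy or WildFly): the proxy looks up the value of the SRV cookie among the values declared on its server lines, so a value that does not match any declared server cannot be routed by affinity.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical model of routing by affinity cookie value. */
final class AffinityCookieSketch {

    // Cookie value -> backend address, mirroring the two "server" lines of the HAProxy example.
    static final Map<String, String> BACKENDS = new LinkedHashMap<>();
    static {
        BACKENDS.put("ribera1", "127.0.0.1:8080");
        BACKENDS.put("ribera2", "127.0.0.1:8180");
    }

    /** Returns the backend for the SRV cookie value, or null when no server declares that value. */
    static String backendFor(String srvCookieValue) {
        return srvCookieValue == null ? null : BACKENDS.get(srvCookieValue);
    }
}
```

In this model, a server started with -Djboss.node.name=ribera1 issues a cookie value that maps to 127.0.0.1:8080, while a server with a different instance-id would issue a value the proxy does not recognize.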

6. HA Singleton Features

In general, an HA or clustered singleton is a service that exists on multiple nodes in a cluster, but is active on just a single node at any given time. If the node providing the service fails or is shut down, a new singleton provider is chosen and started. Thus, other than a brief interval when one provider has stopped and another has yet to start, the service is always running on one node.

6.1. Singleton subsystem

WildFly 10 introduced a "singleton" subsystem, which defines a set of policies that define how an HA singleton should behave. A singleton policy can be used to instrument singleton deployments or to create singleton MSC services.

6.1.1. Configuration

The default subsystem configuration from WildFly’s ha and full-ha profile looks like:

<subsystem xmlns="urn:jboss:domain:singleton:1.0">

<singleton-policies default="default">

<singleton-policy name="default" cache-container="server">

<simple-election-policy/>

</singleton-policy>

</singleton-policies>

</subsystem>

A singleton policy defines:

-

A unique name

-

A cache container and cache with which to register singleton provider candidates

-

An election policy

-

A quorum (optional)

One can add a new singleton policy via the following management operation:

/subsystem=singleton/singleton-policy=foo:add(cache-container=server)

Cache configuration

The cache-container and cache attributes of a singleton policy must reference a valid cache from the Infinispan subsystem. If no specific cache is defined, the default cache of the cache container is assumed. This cache is used as a registry of which nodes can provide a given service and will typically use a replicated-cache configuration.

Election policies

WildFly includes two singleton election policy implementations:

-

simple

Elects the provider (a.k.a. primary provider) of a singleton service based on a specified position in a circular linked list of eligible nodes sorted by descending age. Position=0, the default value, refers to the oldest node, 1 to the second oldest, etc., while position=-1 refers to the youngest node, -2 to the second youngest, etc.

e.g. /subsystem=singleton/singleton-policy=foo/election-policy=simple:add(position=-1)

-

random

Elects a random member to be the provider of a singleton service

e.g. /subsystem=singleton/singleton-policy=foo/election-policy=random:add()
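The position semantics of the simple election policy can be illustrated with a short sketch. This is not WildFly's implementation: the class SimpleElectionSketch is hypothetical, nodes are represented by plain names, and the candidate list is assumed to be already sorted from oldest to youngest.

```java
import java.util.List;

/** Hypothetical illustration of position-based election over a circular list. */
final class SimpleElectionSketch {

    /**
     * Elects the node at the given position.
     * candidatesOldestFirst is sorted by descending age; position may be negative,
     * in which case it counts back from the youngest node.
     */
    static String elect(List<String> candidatesOldestFirst, int position) {
        if (candidatesOldestFirst.isEmpty()) {
            return null; // nobody to elect
        }
        // floorMod wraps both negative and too-large positions around the list.
        int index = Math.floorMod(position, candidatesOldestFirst.size());
        return candidatesOldestFirst.get(index);
    }
}
```

With candidates A, B and C (oldest first), position 0 elects A, position 1 elects B, position -1 elects C and position -2 elects B.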

Preferences

Additionally, any singleton election policy may indicate a preference for one or more members of a cluster. Preferences may be defined either via node name or via outbound socket binding name. Node preferences always take precedence over the results of an election policy.
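How preferences interact with an election policy can be sketched as follows. The class PreferenceSketch is hypothetical and models only name preferences; it assumes that preferences are consulted in the order in which they are listed and that the election policy is used only when no preferred node is among the candidates.

```java
import java.util.List;
import java.util.function.Function;

/** Hypothetical illustration of preferences taking precedence over an election policy. */
final class PreferenceSketch {

    /**
     * Returns the first preferred node that is an eligible candidate;
     * otherwise falls back to the result of the election policy.
     */
    static String elect(List<String> candidates,
                        List<String> preferredNodes,
                        Function<List<String>, String> electionPolicy) {
        for (String preferred : preferredNodes) {
            if (candidates.contains(preferred)) {
                return preferred;
            }
        }
        return electionPolicy.apply(candidates);
    }
}
```

For instance, with a preference for nodeA, nodeA is chosen whenever it is a candidate, regardless of which node the policy itself would have elected.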

e.g.

/subsystem=singleton/singleton-policy=foo/election-policy=simple:list-add(name=name-preferences, value=nodeA)

/subsystem=singleton/singleton-policy=bar/election-policy=random:list-add(name=socket-binding-preferences, value=nodeA)

Quorum

Network partitions are particularly problematic for singleton services, since they can trigger multiple singleton providers for the same service to run at the same time. To defend against this scenario, a singleton policy may define a quorum that requires a minimum number of nodes to be present before a singleton provider election can take place. A typical deployment scenario uses a quorum of N/2 + 1, where N is the anticipated cluster size. This value can be updated at runtime, and will immediately affect any active singleton services.
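The arithmetic behind a majority quorum is shown in the sketch below. The class QuorumSketch is hypothetical, and it assumes that N/2 is computed with integer division, so that at most one side of a network partition can reach the quorum.

```java
/** Hypothetical illustration of the N/2 + 1 quorum rule. */
final class QuorumSketch {

    /** Majority quorum for an anticipated cluster size, using integer division. */
    static int majorityQuorum(int anticipatedClusterSize) {
        if (anticipatedClusterSize < 1) {
            throw new IllegalArgumentException("cluster size must be positive");
        }
        return anticipatedClusterSize / 2 + 1;
    }

    /** An election may take place only when at least quorum nodes are present. */
    static boolean electionAllowed(int presentNodes, int quorum) {
        return presentNodes >= quorum;
    }
}
```

For an anticipated cluster of 5 nodes the quorum is 3. If a partition splits the cluster into groups of 3 and 2 nodes, only the group of 3 may elect a singleton provider.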

e.g.

/subsystem=singleton/singleton-policy=foo:write-attribute(name=quorum, value=3)

6.1.2. Non-HA environments

The singleton subsystem can be used in a non-HA profile, so long as the cache that it references uses a local-cache configuration. In this manner, an application leveraging singleton functionality (via the singleton API or using a singleton deployment descriptor) will continue to function as if the server were the sole member of a cluster. For obvious reasons, the use of a quorum does not make sense in such a configuration.

6.2. Singleton deployments

WildFly 10 resurrected the ability to start a given deployment on a single node in the cluster at any given time. If that node shuts down, or fails, the application will automatically start on another node on which the given deployment exists. Long-time users of JBoss AS will recognize this functionality as being akin to the HASingletonDeployer, a.k.a. "deploy-hasingleton", feature of AS6 and earlier.

6.2.1. Usage

A deployment indicates that it should be deployed as a singleton via a deployment descriptor. This can either be a standalone /META-INF/singleton-deployment.xml file or embedded within an existing jboss-all.xml descriptor. This descriptor may be applied to any deployment type, e.g. JAR, WAR, EAR, etc., with the exception of a subdeployment within an EAR.

e.g.

<singleton-deployment xmlns="urn:jboss:singleton-deployment:1.0" policy="foo"/>

The singleton deployment descriptor defines which singleton policy should be used to deploy the application. If undefined, the default singleton policy is used, as defined by the singleton subsystem.

Using a standalone descriptor is often preferable, since it may be overlaid onto an existing deployment archive.

e.g.

deployment-overlay add --name=singleton-policy-foo --content=/META-INF/singleton-deployment.xml=/path/to/singleton-deployment.xml --deployments=my-app.jar --redeploy-affected

6.2.2. Singleton Deployment metrics

The singleton subsystem registers a set of runtime metrics for each singleton deployment installed on the server.

- is-primary

-

Indicates whether the node on which the operation was performed is the primary provider of the given singleton deployment

- primary-provider

-

Identifies the node currently operating as the primary provider for the given singleton deployment

- providers

-

Identifies the set of nodes on which the given singleton deployment is installed.

e.g.

/subsystem=singleton/singleton-policy=foo/deployment=bar.ear:read-attribute(name=primary-provider)

6.3. Singleton MSC services

The singleton service facility exposes a mechanism for installing an MSC service such that the service only starts on a single member of a cluster at a time. If the member providing the singleton service is shut down or crashes, the facility automatically elects a new primary provider and starts the service on that node. In general, a singleton election happens in response to any change of membership, where the membership is defined as the set of cluster nodes on which the given service was installed.
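The relationship between membership changes and elections can be modelled in a few lines. The sketch below is hypothetical and far simpler than the actual facility: the class SingletonMembershipSketch keeps the providers in join order and, as an assumption made for illustration, always elects the oldest remaining provider.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model: every membership change triggers a new election. */
final class SingletonMembershipSketch {

    private final List<String> providers = new ArrayList<>(); // oldest first
    private String primary; // null while there are no providers

    /** A node on which the service is installed joins the membership. */
    void join(String node) {
        providers.add(node);
        reelect();
    }

    /** A node leaves the membership because it was shut down or crashed. */
    void leave(String node) {
        providers.remove(node);
        reelect();
    }

    /** Illustrative policy: the oldest remaining provider becomes the primary. */
    private void reelect() {
        primary = providers.isEmpty() ? null : providers.get(0);
    }

    String primary() {
        return primary;
    }
}
```

If nodes A and B join in that order, A is the primary provider. When A leaves, the election that follows makes B the primary provider.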

6.3.1. Installing an MSC service using an existing singleton policy

While singleton MSC services have been around since AS7, WildFly adds the ability to leverage the singleton subsystem to create singleton MSC services from existing singleton policies.

The singleton subsystem exposes capabilities for each singleton policy it defines.

These policies, encapsulated by the org.wildfly.clustering.singleton.service.SingletonPolicy interface, can be referenced via the following capability name:

"org.wildfly.clustering.singleton.policy" + policy-name

You can reference the default singleton policy of the server via the name: "org.wildfly.clustering.singleton.default-policy" e.g.

public class MyServiceActivator implements ServiceActivator {

@Override

public void activate(ServiceActivatorContext context) {

ServiceName name = ServiceName.parse("my.service.name");

// Use default singleton policy

Supplier<SingletonPolicy> policy = new ActiveServiceSupplier<>(context.getServiceTarget(), ServiceName.parse(SingletonDefaultRequirement.SINGLETON_POLICY.getName()));

ServiceBuilder<?> builder = policy.get().createSingletonServiceConfigurator(name).build(context.getServiceTarget());

Service service = new MyService();

builder.setInstance(service).install();

}

}

6.3.2. Singleton MSC Service metrics

The singleton subsystem registers a set of runtime metrics for each singleton MSC service installed via a given singleton policy.

- is-primary

-

Indicates whether the node on which the operation was performed is the primary provider of the given singleton service

- primary-provider

-

Identifies the node currently operating as the primary provider for the given singleton service

- providers

-

Identifies the set of nodes on which the given singleton service is installed.

e.g.

/subsystem=singleton/singleton-policy=foo/service=my.service.name:read-attribute(name=primary-provider)

6.3.3. Installing an MSC service using dynamic singleton policy

Alternatively, you can configure a singleton policy dynamically, which is particularly useful if you want to use a custom singleton election policy.

org.wildfly.clustering.singleton.service.SingletonPolicy is a generalization of the org.wildfly.clustering.singleton.service.SingletonServiceConfiguratorFactory interface, which includes support for specifying an election policy, an election listener, and, optionally, a quorum.

The SingletonElectionPolicy is responsible for electing a member to operate as the primary singleton service provider following any change in the set of singleton service providers. Following the election of a new primary singleton service provider, any registered SingletonElectionListener is triggered on every member of the cluster.
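To make the election step more concrete, the following is a minimal, self-contained sketch of one possible election strategy. It does not use the WildFly API: ElectionPolicySketch, PreferredNodeElectionPolicy and QuorumElection are hypothetical names, and plain strings stand in for cluster members. It also assumes that a quorum means the minimum number of candidates that must be present before a primary is elected.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical stand-in for an election contract; node names are plain strings here.
interface ElectionPolicySketch {
    // Returns the elected primary, or null if there is no candidate to elect.
    String elect(List<String> candidates);
}

// Elects a preferred node when it is a candidate; otherwise falls back to the first candidate.
final class PreferredNodeElectionPolicy implements ElectionPolicySketch {
    private final String preferred;

    PreferredNodeElectionPolicy(String preferred) {
        this.preferred = preferred;
    }

    @Override
    public String elect(List<String> candidates) {
        if (candidates.isEmpty()) {
            return null;
        }
        return candidates.contains(this.preferred) ? this.preferred : candidates.get(0);
    }
}

// Illustrates a quorum (assumption): no primary is elected unless enough candidates are present.
final class QuorumElection {
    static Optional<String> electWithQuorum(ElectionPolicySketch policy, List<String> candidates, int quorum) {
        if (candidates.size() < quorum) {
            return Optional.empty();
        }
        return Optional.ofNullable(policy.elect(candidates));
    }
}
```

In this sketch, a preferred node wins whenever it is available, and the quorum check simply withholds the election result when too few candidates are present.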

The 'SingletonServiceConfiguratorFactory' capability may be referenced using the following capability name: "org.wildfly.clustering.cache.singleton-service-configurator-factory" + container-name + "." + cache-name

You can reference a 'SingletonServiceConfiguratorFactory' using the default cache of a given cache container via the name: "org.wildfly.clustering.cache.default-singleton-service-configurator-factory" + container-name

e.g.

public class MySingletonElectionPolicy implements SingletonElectionPolicy {
    @Override
    public Node elect(List<Node> candidates) {
        // ...
        return ...;
    }
}

public class MySingletonElectionListener implements SingletonElectionListener {
    @Override
    public void elected(List<Node> candidates, Node primary) {
        // ...
    }
}

public class MyServiceActivator implements ServiceActivator {
    @Override
    public void activate(ServiceActivatorContext context) {
        String containerName = "foo";
        SingletonElectionPolicy policy = new MySingletonElectionPolicy();
        SingletonElectionListener listener = new MySingletonElectionListener();
        int quorum = 3;
        ServiceName name = ServiceName.parse("my.service.name");
        // Use a SingletonServiceConfiguratorFactory backed by default cache of "foo" container
        Supplier<SingletonServiceConfiguratorFactory> factory = new ActiveServiceSupplier<>(context.getServiceTarget(), ServiceName.parse(SingletonDefaultCacheRequirement.SINGLETON_SERVICE_CONFIGURATOR_FACTORY.resolve(containerName).getName()));
        ServiceBuilder<?> builder = factory.get().createSingletonServiceConfigurator(name)
                .electionListener(listener)
                .electionPolicy(policy)
                .requireQuorum(quorum)
                .build(context.getServiceTarget());
        Service service = new MyService();
        builder.setInstance(service).install();
    }
}

7. Clustering API

WildFly exposes a public API to deployments for performing common clustering operations, such as inspecting group membership and dispatching commands to group members.

This zero-dependency API allows an application to perform basic clustering tasks, while remaining decoupled from the libraries that implement WildFly’s clustering logic.

7.1. Group membership

The Group abstraction represents a logical cluster of nodes. The Group service provides the following capabilities:

-

View the current membership of a group.

-

Identify the designated coordinator for a given group membership. This designated coordinator will be the same on every node for a given membership. Traditionally, the oldest member of the cluster is chosen as the coordinator.

-

Registration facility for notifications of changes to group membership.

WildFly creates a Group instance for every channel defined in the JGroups subsystem, as well as a local implementation. The local Group implementation is effectively a singleton membership containing only the current node. e.g.

@Resource(lookup = "java:jboss/clustering/group/ee") // A Group representing the cluster of the "ee" channel
private Group group;

@Resource(lookup = "java:jboss/clustering/group/local") // A non-clustered Group
private Group localGroup;

To ensure that your application operates consistently regardless of server configuration, we strongly recommend that you reference a given Group using an alias. Most users should use the "default" alias, which references either:

-

A Group backed by the default channel of the server, if the JGroups subsystem is present

-

A non-clustered Group, if the JGroups subsystem is not present

e.g.

@Resource(lookup = "java:jboss/clustering/group/default")
private Group group;

Additionally, WildFly creates a Group alias for every Infinispan cache-container, which references:

-

A Group backed by the transport channel of the cache container

-

A non-clustered Group, if the cache container has no transport

This is useful when using a Group within the context of an Infinispan cache.

e.g.

@Resource(lookup = "java:jboss/clustering/group/server") // Backed by the transport of the "server" cache-container
private Group group;

7.1.1. Node

A Node encapsulates a member of a group (i.e. a JGroups address). A Node has the following distinct characteristics, which will be unique for each member of the group:

- getName()

-

The distinct logical name of this group member. This value inherently defaults to the hostname of the machine, and can be overridden via the "jboss.node.name" system property. You must override this value if you run multiple servers on the same host.

- getSocketAddress()

-

The distinct bind address/port used by this group member. This will be null if the group is non-clustered.

7.1.2. Membership

A Membership is an immutable encapsulation of a group membership (i.e. a JGroups view). Membership exposes the following properties:

- getMembers()

-

Returns the list of members comprising this group membership. The order of this list will be consistent on all nodes in the cluster.

- isCoordinator()

-

Indicates whether the current member is the coordinator of the group.

- getCoordinator()

-

Returns the member designated as coordinator of this group. This method will return a consistent value for all nodes in the cluster.
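The properties above can be pictured with a small, self-contained sketch. This is not the WildFly implementation: MembershipSketch is a hypothetical class, strings stand in for Node instances, and the choice of the first (oldest) member as coordinator is an assumption based on the traditional behaviour described earlier.

```java
import java.util.List;

// Hypothetical immutable snapshot of a group membership, using strings as node names.
final class MembershipSketch {
    private final String localMember;
    private final List<String> members;

    // Assumes a non-empty member list that contains the local member.
    MembershipSketch(String localMember, List<String> members) {
        this.localMember = localMember;
        this.members = List.copyOf(members); // unmodifiable copy, so the snapshot never changes
    }

    List<String> getMembers() {
        return this.members;
    }

    // Assumption: the first (oldest) member acts as coordinator.
    String getCoordinator() {
        return this.members.get(0);
    }

    boolean isCoordinator() {
        return this.localMember.equals(this.getCoordinator());
    }
}
```

Because every node sees the same ordered member list, every node derives the same coordinator, which makes isCoordinator() a convenient guard for work that should run on exactly one member.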

7.1.3. Usage

The Group abstraction is effectively a volatile reference to the current membership, and provides a facility for notification of membership changes. It exposes the following properties and operations:

- getName()

-

The logical name of this group.

- getLocalMember()

-

The Node instance corresponding to the local member.

- getMembership()

-

Returns the current membership of this group.

- register(GroupListener)

-

Registers the specific listener to be notified of changes to group membership.

- isSingleton()

-

Indicates whether the group's membership is non-clustered, i.e. will only ever contain a single member.
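The idea of a volatile membership reference combined with listener registration can be sketched in plain Java. The types below (GroupSketch, MembershipListenerSketch, RegistrationSketch) are hypothetical stand-ins rather than the WildFly API, and the update(…) method only simulates a membership change for illustration.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical listener notified with the previous and current member lists.
interface MembershipListenerSketch {
    void membershipChanged(List<String> previous, List<String> current);
}

// Hypothetical registration handle; closing it removes the listener.
interface RegistrationSketch extends AutoCloseable {
    @Override
    void close();
}

final class GroupSketch {
    // Volatile reference to the current, immutable membership snapshot.
    private volatile List<String> membership;
    private final List<MembershipListenerSketch> listeners = new CopyOnWriteArrayList<>();

    GroupSketch(List<String> initial) {
        this.membership = List.copyOf(initial);
    }

    List<String> getMembership() {
        return this.membership;
    }

    RegistrationSketch register(MembershipListenerSketch listener) {
        this.listeners.add(listener);
        return () -> this.listeners.remove(listener);
    }

    // Simulates a membership change: swaps the snapshot, then notifies listeners.
    void update(List<String> next) {
        List<String> previous = this.membership;
        List<String> current = List.copyOf(next);
        this.membership = current;
        for (MembershipListenerSketch listener : this.listeners) {
            listener.membershipChanged(previous, current);
        }
    }
}
```

Returning a closeable handle from register(…) keeps the listener's lifecycle explicit: once the handle is closed, the listener no longer receives membership changes.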

7.1.4. Example

A distributed "Hello world" example that prints joiners and leavers of a group membership:

public class MyGroupListener implements GroupListener {
    @Resource(lookup = "java:jboss/clustering/group/default") // (1)
    private Group group;
    private Registration<GroupListener> listenerRegistration;

    @PostConstruct
    public void init() {
        this.listenerRegistration = this.group.register(this);
        System.out.println("Initial membership: " + this.group.getMembership().getMembers());
    }

    @PreDestroy
    public void destroy() {
        this.listenerRegistration.close(); // (2)
    }

    @Override
    public void membershipChanged(Membership previous, Membership current, boolean merged) {
        List<Node> previousMembers = previous.getMembers();
        List<Node> currentMembers = current.getMembers();
        List<Node> joiners = currentMembers.stream().filter(member -> !previousMembers.contains(member)).collect(Collectors.toList());
        if (!joiners.isEmpty()) {
            System.out.println("Welcome: " + joiners);
        }
        List<Node> leavers = previousMembers.stream().filter(member -> !currentMembers.contains(member)).collect(Collectors.toList());
        if (!leavers.isEmpty()) {
            System.out.println("Goodbye: " + leavers);
        }
    }
}

| 1 | Injects the default Group of the server |

| 2 | Make sure to close your listener registration! |

7.2. Command Dispatcher

A command dispatcher is a mechanism for dispatching commands to be executed on members of a group.

7.2.1. CommandDispatcherFactory

A command dispatcher is created from a CommandDispatcherFactory, an instance of which is created for every channel defined in the JGroups subsystem, as well as a local implementation. e.g.

@Resource(lookup = "java:jboss/clustering/dispatcher/ee") // A command dispatcher factory backed by the "ee" channel
private CommandDispatcherFactory factory;

@Resource(lookup = "java:jboss/clustering/dispatcher/local") // The non-clustered command dispatcher factory
private CommandDispatcherFactory localFactory;

To ensure that your application functions consistently regardless of server configuration, we recommend that you reference the CommandDispatcherFactory using an alias. Most users should use the "default" alias, which references either:

-

A CommandDispatcherFactory backed by the default channel of the server, if the JGroups subsystem is present

-

A non-clustered CommandDispatcherFactory, if the JGroups subsystem is not present

e.g.

@Resource(lookup = "java:jboss/clustering/dispatcher/default")
private CommandDispatcherFactory factory;

Additionally, WildFly creates a CommandDispatcherFactory alias for every Infinispan cache-container, which references:

-

A CommandDispatcherFactory backed by the transport channel of the cache container

-

A non-clustered CommandDispatcherFactory, if the cache container has no transport

This is useful in the case where a CommandDispatcher is used to communicate with members on which a given cache is deployed.

e.g.

@Resource(lookup = "java:jboss/clustering/dispatcher/server") // Backed by the transport of the "server" cache-container
private CommandDispatcherFactory factory;

7.2.2. Command

A Command encapsulates logic to be executed on a group member. A Command can leverage two types of parameters during execution:

- Sender supplied parameters

-

These are member variables of the Command implementation itself, and are provided during construction of the Command object. As properties of a serializable object, these must also be serializable.

- Receiver supplied parameters, i.e. local context

-

These are encapsulated in a single object, supplied during construction of the CommandDispatcher. The command dispatcher passes the local context as a parameter to the Command.execute(…) method.
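The distinction between the two kinds of parameters can be illustrated with a self-contained sketch. CommandSketch is a hypothetical stand-in that mirrors the shape described above (a serializable object with an execute method that receives the local context); LocalContext and GreetCommand are likewise invented for illustration and are not part of the WildFly API.

```java
import java.io.Serializable;

// Hypothetical stand-in for a command: R is the result type, C the receiver-supplied context type.
interface CommandSketch<R, C> extends Serializable {
    R execute(C context) throws Exception;
}

// Receiver-supplied parameters: local state that exists on the member executing the command.
final class LocalContext {
    final String nodeName;

    LocalContext(String nodeName) {
        this.nodeName = nodeName;
    }
}

final class GreetCommand implements CommandSketch<String, LocalContext> {
    private static final long serialVersionUID = 1L;

    // Sender-supplied parameter: travels with the command, so it must be serializable.
    private final String greeting;

    GreetCommand(String greeting) {
        this.greeting = greeting;
    }

    @Override
    public String execute(LocalContext context) {
        // Combines what the sender provided with what only the receiver knows.
        return this.greeting + " from " + context.nodeName;
    }
}
```

Note that the local context itself is never serialized in this sketch: only the command and its fields would cross the wire, while the context is supplied by whichever member executes it.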

7.2.3. CommandDispatcher

The CommandDispatcherFactory creates a CommandDispatcher using a service identifier and a local context. This service identifier is used to segregate commands from multiple command dispatchers. A CommandDispatcher will only receive commands dispatched by a CommandDispatcher with the same service identifier.

Once created, a CommandDispatcher will locally execute any received commands until it is closed. Once closed, a CommandDispatcher is no longer allowed to dispatch commands.

The functionality of a CommandDispatcher boils down to 2 operations:

- executeOnMember(Command, Node)

-

Executes a given command on a specific group member.

- executeOnGroup(Command, Node…)

-

Executes a given command on all members of the group, optionally excluding specific members

Both methods return responses as a CompletionStage, allowing for asynchronous processing of responses as they complete.
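As a rough illustration of processing responses asynchronously, the sketch below gathers one CompletionStage per member into a single combined stage. It relies only on java.util.concurrent; ResponseCollector is a hypothetical helper, and the use of strings for member names and results is an assumption, since the exact return types of the dispatcher methods are not spelled out in this section.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionStage;
import java.util.concurrent.ConcurrentSkipListMap;

final class ResponseCollector {
    // Combines per-member stages into one stage that completes once every member has responded.
    // A member whose stage failed is recorded with a "failed: ..." entry instead of failing the whole result.
    static CompletionStage<Map<String, String>> collect(Map<String, CompletionStage<String>> responses) {
        Map<String, String> results = new ConcurrentSkipListMap<>();
        CompletableFuture<?>[] pending = responses.entrySet().stream()
                .map(entry -> entry.getValue()
                        .handle((value, error) -> results.put(entry.getKey(),
                                error == null ? String.valueOf(value) : "failed: " + error.getMessage()))
                        .toCompletableFuture())
                .toArray(CompletableFuture[]::new);
        return CompletableFuture.allOf(pending).thenApply(ignored -> results);
    }
}
```

Handling each stage individually before combining them means one unreachable member does not discard the responses of the others; whether that is the right trade-off depends on the command being dispatched.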

7.2.4. Example